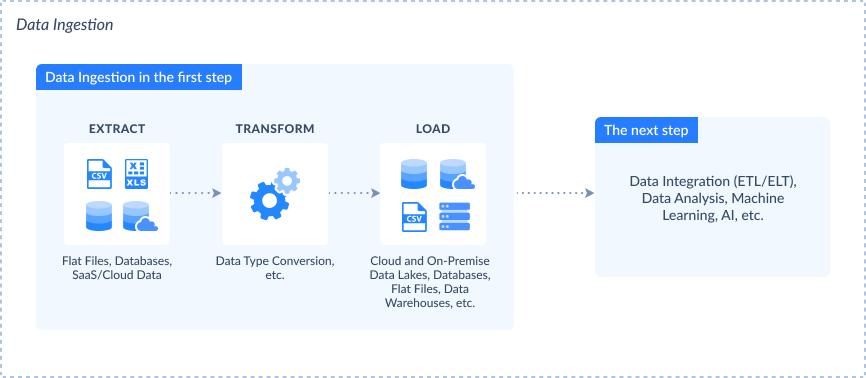

Let’s face it, data doesn’t just land where you want it. You need to pull it from the source, clean it up, and plug it into systems for further transformation. Data ingestion is the process of importing structured and unstructured information from diverse sources like APIs, databases, cloud apps, and IoT devices into centralized storage systems like data warehouses, lakes, or lakehouses.

In plain terms, it is how raw data gets to the place where it can power analytics, reporting, and machine learning.

Data ingestion is vital for finance, healthcare, retail, logistics, telecom, and many other industries. It delivers data quickly and accurately, whether you're monitoring patient vitals in real time, syncing customer activity across touchpoints, or forecasting supply chain disruptions.

Today’s leading systems go beyond simple batch uploads. Hybrid approaches that combine real-time streaming and scheduled batch jobs are becoming the norm, especially with the rise of AI and ML, which often demand immediate updates. That shift pushes organizations to rethink how they handle data velocity, variety, and volume at scale.

In this guide, we’ll break down the types of data ingestion, where the data comes from, how the process unfolds step-by-step, and what challenges to watch for. We’ll also cover popular tools, show how to choose the right one for your setup, and share best practices that work in the real world. Let’s get into it.

Why Data Ingestion Matters

Data ingestion is the critical first step in transforming raw, disparate data into actionable insights. It bridges the gap between fragmented records and a unified, reliable data foundation, enabling organizations to thrive in today’s dynamic digital landscape. Below, we explore why robust data ingestion is essential across industries and use cases.

Solving Core Data Challenges

A well-designed data ingestion process addresses several foundational obstacles:

- Breaking Down Data Silos

Businesses run on countless tools and platforms. Data ingestion consolidates disparate sources into a unified view, enabling cross-functional analysis and more accurate reporting.

- Reducing Latency in Insights

Outdated data leads to outdated decisions. Ingestion keeps dashboards, alerts, and forecasts current.

- Eliminating Manual Work

Manual data pulling is slow, error-prone, and unscalable. Automated ingestion reduces human effort and frees teams to focus on high-value tasks like model building and decision-making.

- Improving Data Quality from the Start

Ingestion workflows can include validation, cleansing, deduplication, and enrichment, helping ensure that only trustworthy, consistent data flows downstream.

Enabling Strategic Business Outcomes

Effective data ingestion serves as the gateway between raw records and business results:

- Enhanced Analytics:

Timely, accurate data lets teams uncover patterns and trends they can act on.

- Improved Efficiency:

Automation reduces manual work and helps teams to focus on building models, not babysitting pipelines.

- Better Compliance:

It supports data governance and keeps you aligned with regulations, especially as privacy laws evolve.

Supporting Critical Use Cases

Strong ingestion pipelines are the foundation behind critical real-time systems:

- Fraud Detection: Financial systems obtain and analyze transaction details instantly to catch suspicious activity as it happens.

- Dynamic Pricing: E-commerce and travel platforms rely on real-time ingestion to adjust prices based on market demand and customer behavior.

- Predictive Maintenance: In sectors like manufacturing, continuous ingestion from sensors helps spot issues before machines break down.

Built for Scale and Change

Effective data ingestion strategies can handle increasing data volumes, velocity, and variety, adapting to schema changes and business growth without compromising performance.

- Scalability: Whether dealing with gigabytes or petabytes, the right ingestion strategy can grow with your business needs.

- Flexibility: As new data sources emerge and schemas evolve, ingestion pipelines must be able to adapt without disrupting operations.

Relevance Across Industries

Data ingestion is not confined to a single sector. Its benefits are widespread:

- Finance: Supports real-time risk assessment, fraud detection, and customer analytics.

- Healthcare: Enables up-to-date patient records, diagnostics, and population health insights.

- Retail: Facilitates personalized recommendations, demand forecasting, and inventory sync.

- Information Technology: Supports system monitoring, performance analytics, and service optimization.

Types of Data Ingestion

There are various options for moving records from a source to storage. The type of data ingestion you choose depends on data velocity, business needs, and how fast you need insights.

Batch Ingestion

Batch ingestion collects and processes large volumes of data at scheduled intervals, typically hourly, nightly, or daily. It groups records together before transferring them into a destination like a data lake or warehouse.

- Best for: Traditional BI reports, historical trend analysis, and compliance archiving.

- Why it matters: This method is simple to implement, cost-effective, and easy to manage. It works well for static datasets or use cases where latency isn't critical. However, it’s not built for fast-changing environments that require up-to-the-minute data.
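As a rough illustration of the batch pattern, the sketch below reads a flat-file export in fixed-size chunks so that each chunk can be transferred as one unit. The function name `read_in_batches`, the sample file contents, and the batch size are assumptions made for the example, not part of any particular tool.

```python
import csv
import io
from itertools import islice


def read_in_batches(file_obj, batch_size=2):
    """Yield lists of rows (as dicts) with at most batch_size rows each."""
    reader = csv.DictReader(file_obj)
    while True:
        # islice pulls the next batch_size rows without loading the whole file.
        batch = list(islice(reader, batch_size))
        if not batch:
            return
        yield batch


# Hypothetical nightly export, held in memory for the example.
nightly_export = io.StringIO("id,amount\n1,10\n2,20\n3,30\n")
batch_sizes = [len(batch) for batch in read_in_batches(nightly_export)]
print(batch_sizes)
```

In a scheduled job, each yielded batch would be handed to the load step; the scheduling itself (hourly, nightly, daily) lives outside this function.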

Real-Time (Streaming) Ingestion

Real-time ingestion moves data continuously as it's generated, with latency ranging from milliseconds to seconds. This method uses streaming platforms such as Apache Kafka or Amazon Kinesis to capture and transmit live data flows.

- Best for: Fraud detection, IoT telemetry, algorithmic trading, and instant personalization.

- Why it matters: It enables immediate insight and action but requires a more complex and scalable infrastructure to handle continuous input and ensure low-latency processing.

Micro-Batching

Micro-batching gathers small batches of data over very short intervals, typically every 15–60 seconds, and then processes them as mini-batches.

- Best for: Log ingestion, clickstream analytics, user behavior tracking.

- Why it matters: This method reduces latency compared to traditional batch processing and demands far fewer resources than full-scale real-time streaming. It is helpful when near-real-time insights are needed without building complex streaming architectures.
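To make the idea concrete, here is a minimal sketch that groups timestamped events into fixed 30-second windows. The function name `micro_batches`, the `(timestamp, payload)` event shape, and the window size are assumptions for the example; in practice a stream processor usually provides this behavior.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable


def micro_batches(events: Iterable[tuple[float, str]], window_seconds: float = 30.0) -> list[list[str]]:
    """Group (timestamp, payload) events into fixed windows of window_seconds."""
    buckets: dict[int, list[str]] = defaultdict(list)
    for timestamp, payload in events:
        # Floor division assigns each event to the window it falls in.
        buckets[int(timestamp // window_seconds)].append(payload)
    # Return the batches in chronological window order.
    return [buckets[key] for key in sorted(buckets)]


# Hypothetical clickstream events: (seconds since start, event name).
events = [(0.5, "login"), (12.0, "click"), (31.2, "click"), (59.9, "purchase"), (61.0, "logout")]
batches = micro_batches(events, window_seconds=30.0)
print(batches)
```

Each inner list is one mini-batch that would be processed together, which is where the latency and resource trade-off comes from: a shorter window means fresher data but more frequent processing.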

Hybrid Ingestion

Hybrid ingestion blends batch and streaming methods into a single architecture, often following a pattern such as Lambda (parallel batch and streaming layers) or Kappa (a single streaming path that can replay history). It ingests and processes real-time data for immediate use, while also collecting it in batches for long-term storage and deeper analysis.

- Best for: Organizations that need both quick insights and in-depth historical analysis.

- Why it matters: Hybrid ingestion provides the flexibility to scale and adapt. It lets you act fast on fresh data while building a robust historical dataset for downstream reporting, modeling, or compliance.

| Type | Latency | Use Cases | Complexity |
|---|---|---|---|
| Batch | High (minutes–hours) | Reports, historical analytics | Low |
| Real-Time | Low (ms–seconds) | Fraud detection, real-time dashboards | High |
| Micro-Batching | Medium (seconds) | Log processing, user behavior | Moderate |
| Hybrid | Varies | Mixed-use (real-time + batch) | Moderate–High |

Different types of data ingestion exist because data itself is so varied. Some data moves fast, and some does not. The smartest pipelines match the right approach to each workload. The key is knowing what’s mission-critical right now and what can wait.

Common Data Ingestion Sources

Data ingestion starts with pulling records from the right sources, and those sources are as diverse as modern tech stacks. Here are the most common ones you'll encounter:

Databases

Relational (e.g., MySQL, PostgreSQL) and non-relational (e.g., MongoDB, Cassandra) databases store structured and semi-structured information across countless business systems. They are often the backbone of transactional systems like e-commerce platforms or CRMs.

SaaS Applications

Popular cloud platforms like Salesforce, HubSpot, or QuickBooks house customer, sales, and financial information. Importing from these ensures your internal analytics systems stay in sync with the apps your teams rely on daily.

Flat Files

Formats like CSV, Excel, or TSV remain widely used for ad hoc file sharing or historical imports. Often stored locally or in cloud drives, they’re commonly imported during migrations or audits.

Cloud Storage Services

Platforms like AWS S3, Azure Blob Storage, and Google Cloud Storage are used for large-scale file storage, backups, and data lakes. These are popular landing zones for raw ingestion before processing.

Streaming Sources

Systems like Apache Kafka and Amazon Kinesis enable real-time data collection. They are ideal for use cases like fraud detection, live monitoring, or personalization engines.

IoT Devices and Sensors

Devices deployed in the field, such as smart meters, wearables, or vehicle trackers, generate continuous streams of telemetry. For example, IoT sensors in smart cities track traffic flow in real time, feeding into urban planning or emergency response systems.

Data Ingestion Process – Step-by-Step Overview

A well-orchestrated data ingestion pipeline is behind every fast dashboard, smart report, or real-time alert. It moves the right information, in the right way, to the right place.

Below is an eight-step process that most modern ingestion workflows follow:

Step 1: Identify Data Sources

Knowing your sources sets the foundation for designing the pipeline. Include only the data sources that are relevant to current requirements. They can be SQL or NoSQL databases, cloud apps, flat files, IoT feeds, cloud storage, or streaming platforms.

Get the credentials to access them. Then, assemble the connection strings, file paths, API URLs, or other details needed to open each data source. Once connected, identify the tables or objects where the data resides.

Step 2: Select Ingestion Method

Choose how to get data based on how fast you need it:

- Batch ingestion loads data on scheduled intervals, ideal for periodic reporting.

- Real-time or streaming ingestion handles continuous data flows, supporting use cases like fraud detection or live monitoring.

- Hybrid ingestion mixes both approaches to fit varied sources and business needs.

Step 3: Choose the Right Data Ingestion Tool

Before moving forward, decide on the platform or tool that will power your ingestion. Your choice should align with your data sources, ingestion methods, transformation needs, scalability, and budget.

Whether you go with cloud-native services, open-source frameworks, or commercial ETL/ELT platforms, picking the right tool upfront sets you up for capable pipelines and easier maintenance.

Step 4: Extract Data

When the method and tool are defined, proceed to data extraction. This is the vital first action: collecting or copying data from your sources.

The goal here is to accurately retrieve all relevant data while minimizing impact on source systems. Sometimes, extracted data is temporarily held in staging areas before further processing.
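One common way to limit the load on a source system is incremental extraction: remember the highest key already copied and request only newer rows. The sketch below is a minimal version of that idea using Python's built-in `sqlite3` module as a stand-in source. The `orders` table, its columns, and the function name `extract_incremental` are assumptions for the example.

```python
import sqlite3


def extract_incremental(conn, last_seen_id, batch_size=1000):
    """Return rows with id > last_seen_id, plus the new high-water mark."""
    rows = conn.execute(
        "SELECT id, customer, amount FROM orders WHERE id > ? ORDER BY id LIMIT ?",
        (last_seen_id, batch_size),
    ).fetchall()
    # Keep the old watermark when nothing new arrived.
    new_watermark = rows[-1][0] if rows else last_seen_id
    return rows, new_watermark


# Hypothetical source table, created in memory for the example.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
source.executemany(
    "INSERT INTO orders (id, customer, amount) VALUES (?, ?, ?)",
    [(1, "acme", 10.0), (2, "globex", 25.5), (3, "initech", 7.25)],
)

first_rows, watermark = extract_incremental(source, last_seen_id=0, batch_size=2)
next_rows, watermark = extract_incremental(source, last_seen_id=watermark, batch_size=2)
print(first_rows, next_rows, watermark)
```

This approach assumes the key only ever increases and that rows are not updated in place; sources with updates typically need a modification timestamp or change data capture instead.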

Step 5: Validate and Clean

Never trust data blindly. Solid validation protects your pipeline from various issues. If you don’t want to pull garbage through the whole pipeline, then do at least the minimum clean-up:

- Remove duplicates

- Handle missing or inconsistent values

- Validate types and formats

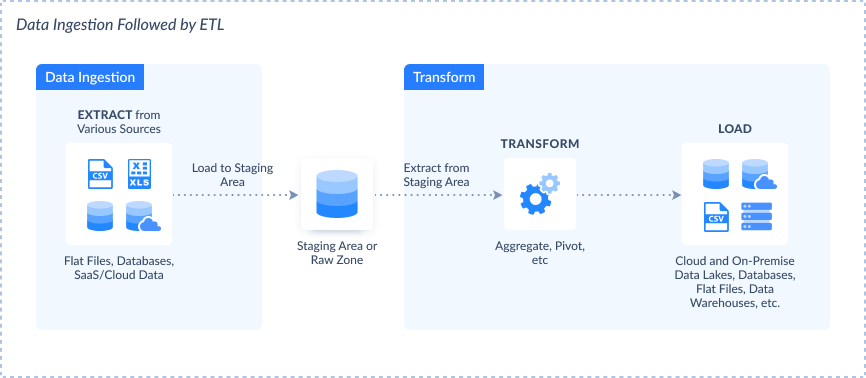

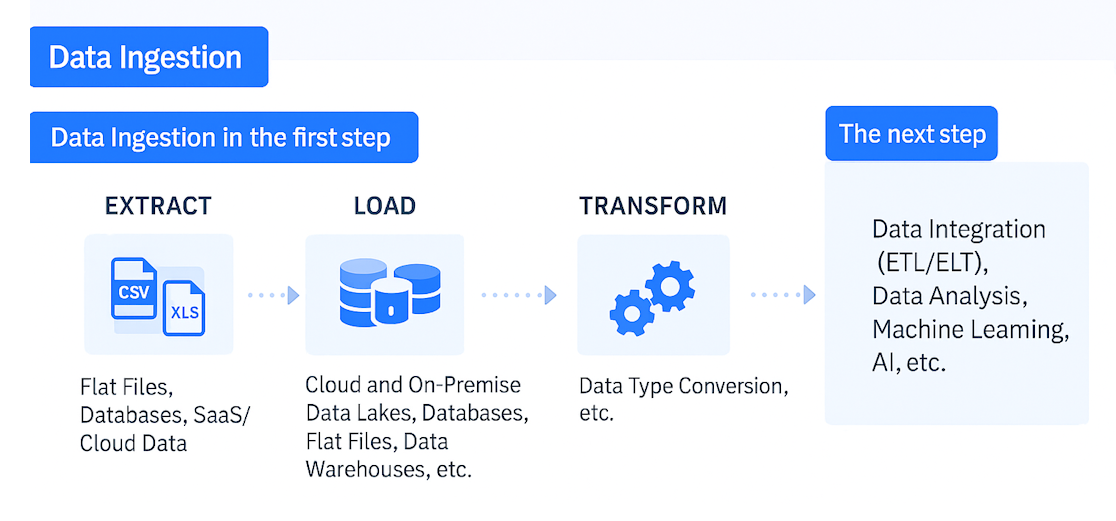
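The three clean-up tasks above can be sketched in a few lines of plain Python. This is a minimal illustration, not a full validation framework: the field names (`id`, `country`, `amount`, `signup_date`), the `"unknown"` default, and the function name `clean_records` are all assumptions made for the example.

```python
from datetime import date


def clean_records(records):
    """Validate types and formats, fill a missing value, and drop duplicates."""
    seen_ids = set()
    cleaned, rejected = [], []
    for record in records:
        try:
            row = {
                "id": int(record["id"]),
                # Handle missing values with an explicit default.
                "country": (record.get("country") or "unknown").strip().lower(),
                "amount": float(record["amount"]),
                "signup_date": date.fromisoformat(record["signup_date"]),
            }
        except (KeyError, TypeError, ValueError):
            # Keep bad rows aside for inspection instead of loading them.
            rejected.append(record)
            continue
        if row["id"] in seen_ids:
            continue  # remove duplicates
        seen_ids.add(row["id"])
        cleaned.append(row)
    return cleaned, rejected


# Hypothetical raw rows: one duplicate, one missing country, one bad amount.
raw = [
    {"id": "1", "country": " US ", "amount": "19.99", "signup_date": "2024-03-01"},
    {"id": "1", "country": "US", "amount": "19.99", "signup_date": "2024-03-01"},
    {"id": "2", "country": None, "amount": "5", "signup_date": "2024-03-02"},
    {"id": "3", "country": "DE", "amount": "n/a", "signup_date": "2024-03-03"},
]
cleaned, rejected = clean_records(raw)
print(len(cleaned), len(rejected))
```

Routing rejected rows to a separate list (or table) rather than silently dropping them makes quality problems visible to whoever owns the source.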

Step 6: Data Transformation (Optional but Common)

Depending on your destination and analysis goals, you might transform data by standardizing formats, deriving new fields, or enriching it with external sources. Some pipelines do this before loading (ETL), others after (ELT). Either way, transformation ensures data fits your business logic and reporting needs.
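The difference between the two orderings can be shown side by side. In this sketch, an in-memory SQLite database stands in for the destination; the table names, the sample rows, and the `transform` function are assumptions for the example. The ETL path transforms rows in application code before loading, while the ELT path loads raw rows first and transforms them with SQL inside the destination.

```python
import sqlite3

# Hypothetical raw rows: (order date, country code, amount in cents).
raw_orders = [("2024-03-01", "us", 1999), ("2024-03-01", "US", 501)]


def transform(row):
    """Standardize the country code and derive an amount in currency units."""
    order_date, country, amount_cents = row
    return (order_date, country.upper(), amount_cents / 100)


db = sqlite3.connect(":memory:")

# ETL: transform in application code, then load the finished rows.
db.execute("CREATE TABLE orders_etl (order_date TEXT, country TEXT, amount REAL)")
db.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", [transform(r) for r in raw_orders])

# ELT: load raw rows untouched, then transform with SQL inside the destination.
db.execute("CREATE TABLE orders_raw (order_date TEXT, country TEXT, amount_cents INTEGER)")
db.executemany("INSERT INTO orders_raw VALUES (?, ?, ?)", raw_orders)
db.execute(
    "CREATE TABLE orders_elt AS "
    "SELECT order_date, UPPER(country) AS country, amount_cents / 100.0 AS amount "
    "FROM orders_raw"
)

etl_rows = db.execute("SELECT * FROM orders_etl ORDER BY amount").fetchall()
elt_rows = db.execute("SELECT * FROM orders_elt ORDER BY amount").fetchall()
print(etl_rows == elt_rows)
```

Both paths end with the same rows; what differs is where the work runs and whether the untransformed data remains available in the destination for later reprocessing.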

Step 7: Load Data into Destination

Load data to a data lake, warehouse, or lakehouse, like Snowflake, BigQuery, Redshift, or others. This step makes your data accessible for querying, visualization, and advanced analytics.
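A useful property for the load step is idempotency: rerunning the same load should not create duplicate rows. The sketch below shows one way to get that, using SQLite's `INSERT OR REPLACE` with an in-memory database standing in for the warehouse. The `customers` table and the function name `load_rows` are assumptions for the example; cloud warehouses typically offer their own merge or upsert statements for the same purpose.

```python
import sqlite3


def load_rows(conn, rows):
    """Insert rows keyed by id, replacing any existing row with the same id."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT, plan TEXT)"
    )
    with conn:  # commits on success, rolls back if an error is raised
        conn.executemany(
            "INSERT OR REPLACE INTO customers (id, name, plan) VALUES (?, ?, ?)", rows
        )
    return conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]


warehouse = sqlite3.connect(":memory:")  # stand-in for the real destination
load_rows(warehouse, [(1, "Ada", "free"), (2, "Linus", "pro")])
# A rerun with an updated record overwrites in place instead of duplicating.
count_after_rerun = load_rows(warehouse, [(1, "Ada", "pro"), (2, "Linus", "pro")])
plan = warehouse.execute("SELECT plan FROM customers WHERE id = 1").fetchone()[0]
print(count_after_rerun, plan)
```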

Step 8: Monitor and Maintain the Pipeline

Your pipeline job isn’t done once it’s built. Active monitoring means setting alerts for failures or unexpected data changes, logging performance to catch bottlenecks early, and regularly auditing schema or API updates. A well-maintained pipeline scales with your data and keeps insights flowing uninterrupted.

Key Data Ingestion Challenges (and Solutions)

It might seem clear at first glance: connect to the source, get the records, done. But in reality, several challenges lurk beneath the surface. They can disrupt pipelines, corrupt datasets, or even derail analytics initiatives if left unchecked.

Here are the most common challenges and solutions for staying ahead of them.

Inconsistent or Poor Data Quality

- Problem: Sources may contain duplicates, missing values, typos, or mismatched formats, especially when pulling from multiple systems.

- Solution: Integrate validation and cleansing steps early in the pipeline. Use tools that enforce schema checks, deduplication, and profiling to maintain quality.

Performance Bottlenecks

- Problem: Inefficient ingestion methods delay the entire data processing effort, and the delays get worse as data volumes grow. High network latency and resource contention also hurt performance.

- Solution: Choose the ingestion method deliberately and plan for growth. Estimate realistic peak loads and likely future volumes, then load-test your data pipeline's capacity.

Handling High Volume and Velocity

- Problem: Streaming millions of records per minute or batch processing large datasets can overload systems.

- Solution: Use scalable cloud platforms (like Snowflake, BigQuery, AWS Glue, or others) that auto-scale based on load. Implement message queuing systems like Kafka to buffer and balance spikes in real-time traffic.
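The buffering idea can be illustrated without any infrastructure. In the sketch below, a bounded in-process queue sits between a fast producer and a consumer: when the buffer is full, the producer blocks instead of overwhelming the consumer. This is only a stand-in for a message broker such as Kafka, and the function name `run_buffered_ingest` and the buffer size are assumptions for the example.

```python
import queue
import threading


def run_buffered_ingest(events, buffer_size=100):
    """Pass events through a bounded buffer to a single consumer thread."""
    buffer = queue.Queue(maxsize=buffer_size)  # put() blocks while the buffer is full
    stored = []
    sentinel = object()  # signals the consumer that no more events are coming

    def consumer():
        while True:
            item = buffer.get()
            if item is sentinel:
                break
            stored.append(item)  # stand-in for writing to the destination

    worker = threading.Thread(target=consumer)
    worker.start()
    for event in events:
        buffer.put(event)  # waits here when the consumer falls behind
    buffer.put(sentinel)
    worker.join()
    return stored


stored = run_buffered_ingest(range(1000), buffer_size=10)
print(len(stored))
```

A real broker adds durability and lets multiple consumers share the load, which an in-process queue does not.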

Security and Compliance Risks

- Problem: Sensitive information (PII, financials, health records) moving across systems poses privacy and regulatory risks.

- Solution: Apply end-to-end encryption, use tokenization, and ensure GDPR/CCPA compliance. Masking and role-based access controls (RBAC) also help secure ingestion pipelines.
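As a small illustration of masking and tokenization during ingestion, the sketch below replaces a sensitive identifier with a keyed hash and masks most of an email address before the record moves downstream. The field names, the `SECRET_KEY` placeholder, and the helper names are assumptions for the example. A keyed hash gives a consistent, non-reversible token; it is not a substitute for a vault-based tokenization service or for legal review of compliance requirements.

```python
import hashlib
import hmac

# Hypothetical key; in practice it would come from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"


def tokenize(value, key=SECRET_KEY):
    """Return a deterministic keyed hash so the same input maps to the same token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def protect(record):
    """Build the downstream record without the raw sensitive fields."""
    return {
        "customer_token": tokenize(record["ssn"]),
        "email": mask_email(record["email"]),
        "amount": record["amount"],
    }


protected = protect({"ssn": "123-45-6789", "email": "jane.doe@example.com", "amount": 42.0})
print(protected["email"])
```

Because the token is deterministic, records for the same person can still be joined downstream without exposing the original identifier.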

Schema Drift

- Problem: Changes in source schema (new columns, changed types) can silently break pipelines or corrupt target tables.

- Solution: Implement schema monitoring and automated alerts. Use platforms that offer schema evolution support or dynamic mappings to adapt safely.
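A basic form of schema monitoring is comparing each incoming record against the schema the pipeline expects. The sketch below reports added columns, missing columns, and type changes. The `EXPECTED_SCHEMA` mapping, the field names, and the function name `detect_schema_drift` are assumptions for the example; dedicated platforms do this against source metadata rather than record by record.

```python
# Hypothetical contract for the incoming records.
EXPECTED_SCHEMA = {"id": int, "email": str, "amount": float}


def detect_schema_drift(record, expected=EXPECTED_SCHEMA):
    """Compare one record against the expected schema and describe any drift."""
    added = sorted(set(record) - set(expected))
    missing = sorted(set(expected) - set(record))
    type_changes = {
        name: type(record[name]).__name__
        for name, expected_type in expected.items()
        if name in record and not isinstance(record[name], expected_type)
    }
    return {"added": added, "missing": missing, "type_changes": type_changes}


# The source now sends id as a string, drops amount, and adds a new column.
drift = detect_schema_drift({"id": "17", "email": "a@example.com", "signup_source": "ad"})
print(drift)
```

Any non-empty entry in the result is a candidate for an automated alert before the record reaches the target table.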

Complex Integrations Across Diverse Sources

- Problem: Getting data from many apps, formats, and APIs can create a mess of custom code and manual steps.

- Solution: Use pre-built connectors and integration platforms (like Skyvia, Informatica, Fivetran, or others) to simplify onboarding of new sources and reduce engineering overhead.

Data Ingestion Tools and Technologies

There’s no single approach. Different tools and frameworks are built for different environments, skill sets, and business demands. Here’s how the landscape breaks down.

By Category

Open Source Tools

These tools give you full access to the source code, making them ideal for teams that want to customize, self-host, or fine-tune their pipelines. On the one hand, you get flexibility. On the other, the responsibility for setup, scaling, and support is also yours.

Proprietary Tools

Commercially developed platforms come with polished UIs, built-in connectors, and vendor support. They speed up deployment but may come with long-term licensing costs or vendor lock-in.

Cloud-Native Tools

These run directly in the cloud, offering plug-and-play scalability without the hassle of provisioning infrastructure. Great for teams that need to move fast or scale on demand.

On-Premises Tools

Ideal for organizations with strict governance or regulatory requirements, on-prem tools offer tighter control, but also require in-house hardware, maintenance, and IT support.

By Approach

Hand-Coded Pipelines

Fully custom, built from scratch. These pipelines offer precision and control but demand significant engineering effort and ongoing maintenance.

Prebuilt Connectors and Workflow Tools

Designed for rapid integration, these tools simplify setup through drag-and-drop interfaces or config-based pipelines. Sometimes they offer less flexibility when use cases get complex.

Data Integration Platforms

All-in-one platforms that handle ingestion, transformation, and delivery across environments. They reduce tool sprawl but may require expertise to configure and scale properly.

DataOps-Driven Ingestion

This mindset treats data ingestion as a collaborative, automated process. By bringing together engineering, analytics, and governance teams, DataOps helps streamline ingestion workflows and reduce bottlenecks.

Approach/Tool Comparison

| Approach/Tool Type | Flexibility | Ease of Use | Scalability | Best For |
|---|---|---|---|---|
| Open Source Tools | High (customizable) | Low (requires expertise) | Depends on infrastructure | Teams that need full control/custom logic |
| Proprietary Tools | Moderate (limited by vendor features) | High (user-friendly interfaces) | High (vendor-managed) | Quick deployments with vendor support |
| Cloud-Native Tools | Moderate to High (depends on service) | High (minimal setup) | High (auto-scaling) | Cloud-first organizations |
| On-Premises Tools | High (full control over environment) | Moderate (IT support needed) | Limited (hardware-bound) | Highly regulated environments |
| Hand-Coded Pipelines | Very High (fully custom) | Low (developer-driven) | Depends on design | Complex, tailor-made use cases |
| Prebuilt Connectors and Workflow Tools | Low to Moderate (predefined options) | High (designed for usability) | Moderate | Fast setup with basic needs |
| Data Integration Platforms | High (broad feature set) | Moderate (requires setup/config) | High | End-to-end pipeline orchestration |
| DataOps-Driven Ingestion | Moderate (automation + collaboration) | Moderate (process-driven setup) | High (automated pipeline scaling) | Teams focusing on efficiency and agility |

How to Choose the Right Solution for Data Ingestion

The best tools help organizations build resilient, intelligent pipelines that adapt to business needs. Choosing the right toolset is about balancing flexibility, control, and scalability while reducing the engineering effort to maintain workflows. Below, we list the features and capabilities inherent in the best tools on the market.

Multimodal Ingestion Capabilities

Modern platforms support batch and real-time streaming, often within the same architecture. This flexibility allows teams to handle structured and unstructured data from cloud, on-prem, and edge sources with equal ease.

- Schedule-driven batch processing for routine loads

- Real-time pipelines for low-latency updates (e.g., event streams, IoT feeds)

- Micro-batching options to balance speed and efficiency

Universal Connectivity

Effective ingestion tools come with a broad set of prebuilt connectors for seamless integration across:

- Relational and NoSQL databases

- Cloud storage systems

- SaaS applications and APIs

- Message queues and event brokers (Kafka, MQTT, etc.)

These connectors reduce the need for custom scripts and speed up integration with third-party systems.

Embedded Data Quality and Validation

Reliable pipelines ensure data is clean, consistent, and usable. Built-in quality features may include:

- Schema enforcement and anomaly detection

- Deduplication, null handling, and field mapping

- Real-time validation against data contracts

Governance, Security, and Compliance

Ingestion tools are increasingly responsible for enforcing privacy and security policies during movement:

- Encryption in motion and at rest

- Access control and identity management

- GDPR, HIPAA, and SOC 2 alignment features

- Audit trails for compliance verification

Automation and Pipeline Orchestration

Enterprise-scale ingestion often involves dozens of sources and complex workflows. Tools now include automation layers to:

- Orchestrate jobs with triggers, dependencies, and retries

- Monitor ingestion pipelines in real-time

- Automatically adapt to schema changes or source outages

Scalability and Deployment Flexibility

Whether deployed in the cloud, on-premises, or in hybrid setups, ingestion platforms must scale horizontally and handle volume bursts without manual intervention.

- Elastic cloud-native architecture

- Edge ingestion support for IoT and mobile devices

- Multi-region and cross-cloud support for distributed teams

Advanced Data Ingestion Use Cases

The use cases below highlight the critical role of data ingestion in driving innovation and efficiency across various sectors:

Real-Time Fraud Detection in Banking

Financial institutions use real-time ingestion to combat fraud as it happens. For instance, a leading U.S. bank implemented a sophisticated ingestion and mapping system to enhance its fraud detection capabilities. Such systems can deliver significant financial savings and operational efficiency.

IoT Analytics in Smart Cities

Smart cities utilize IoT devices to collect vast amounts of information for urban management. Cities like London and Barcelona have implemented connected public transport systems and traffic monitoring solutions. These systems and solutions rely on real-time ingestion to optimize operations and improve citizen services.

Customer Onboarding in SaaS

SaaS companies are automating customer data ingestion to accelerate onboarding processes. Automated pipelines help organizations pull customer information fast, enhancing user experience and speeding up time-to-value.

AI Model Training and Personalization

Enterprises like Netflix employ data ingestion pipelines to feed machine learning models for content recommendation and personalization. These pipelines help process vast datasets, allowing for real-time content suggestions and improved user engagement.

Best Practices for Effective Data Ingestion

Following these best practices helps ensure your pipelines are efficient, resilient, and ready to scale.

Define Clear Objectives

Requirements and objectives should be clear from the start.

Tip: Align the types of data to ingest, its sources, and intended use to business goals.

Prioritize Data Quality Early

Data ingestion is not just copying records to a target location. The copy needs to be validated for accuracy, completeness, and consistency. The ingestion process should check for missing values, duplicate rows, and other quality issues.

Tip: Set up schema enforcement and notify teams of any schema drift.

Choose the Right Ingestion Method

Match your ingestion strategy to your use case. Batch jobs may be fine for daily reports, but real-time needs streaming.

Tip: Use micro-batching for near real-time performance with lower infrastructure costs.

Automate Wherever Possible

Manual data transfers don’t scale, and they’re prone to errors. Use workflow orchestration tools to automate ingestion jobs, schedule updates, and handle retries automatically.

Tip: Automate alerts for job failures, delays, and load mismatches.
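Orchestration tools handle retries for you, but the underlying logic is simple enough to sketch. The example below retries a failing job with exponential backoff and re-raises the error once attempts run out. The function name `run_with_retries`, the delay values, and the simulated `flaky_job` are assumptions for the example.

```python
import time


def run_with_retries(job, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Run job(); on failure wait base_delay * 2^(attempt - 1) seconds and retry."""
    for attempt in range(1, max_attempts + 1):
        try:
            return job()
        except Exception as error:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure so an alert can fire
            delay = base_delay * 2 ** (attempt - 1)
            print(f"attempt {attempt} failed ({error}); retrying in {delay}s")
            sleep(delay)


calls = {"count": 0}


def flaky_job():
    """Simulated ingestion job that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("source unavailable")
    return "loaded 120 rows"


delays = []
# Record the delays instead of sleeping so the example runs instantly.
result = run_with_retries(flaky_job, sleep=delays.append)
print(result, delays)
```

Passing `sleep` as a parameter keeps the function testable; in production the default `time.sleep` applies.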

Design for Scalability and Performance

Design the ingestion process to reduce latency and maximize throughput.

Tip: Use approaches like incremental updates, parallel processing, and compression techniques. Then, choose scalable architectures, distributed processing frameworks, and cloud-native services to handle large-scale ingestion.

Monitor Pipeline Health

Ongoing performance monitoring helps catch slowdowns, data loss, or broken connections before they impact the business.

Tip: Track metrics like throughput, latency, error rates, and source availability.
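As a minimal sketch of turning such metrics into alerts, the example below computes throughput and error rate for one pipeline run and compares them with thresholds. It covers only two of the metrics mentioned, and the class name `RunStats`, the threshold values, and the sample numbers are assumptions for the example.

```python
from dataclasses import dataclass


@dataclass
class RunStats:
    rows_read: int
    rows_failed: int
    duration_seconds: float

    @property
    def throughput(self) -> float:
        """Rows processed per second."""
        return self.rows_read / self.duration_seconds if self.duration_seconds else 0.0

    @property
    def error_rate(self) -> float:
        """Share of rows that failed to load."""
        return self.rows_failed / self.rows_read if self.rows_read else 0.0


def health_alerts(stats, max_error_rate=0.01, min_throughput=100.0):
    """Return a human-readable alert for each threshold the run violates."""
    alerts = []
    if stats.error_rate > max_error_rate:
        alerts.append(f"error rate {stats.error_rate:.1%} exceeds {max_error_rate:.1%}")
    if stats.throughput < min_throughput:
        alerts.append(
            f"throughput {stats.throughput:.0f} rows/s is below {min_throughput:.0f} rows/s"
        )
    return alerts


# Hypothetical run: 10,000 rows in 50 seconds, 250 of them failed.
stats = RunStats(rows_read=10_000, rows_failed=250, duration_seconds=50.0)
alerts = health_alerts(stats)
print(alerts)
```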

Documentation

Proper documentation encourages knowledge sharing, eases troubleshooting, and cushions the impact of staff turnover. Catalog information about each data source, its format, schema, lineage, and traceability to preserve and share internal expertise.

Documentation should include up-to-date requirements, goals, objectives, and metadata. This supports ongoing maintenance and enhancements.

Tip: Document data flows, dependencies, processing steps, and transformations for transparency and compliance.

Continuous Improvement

Evaluate and improve data ingestion processes based on feedback, performance metrics, and evolving business needs. Identify opportunities to improve the efficiency and effectiveness of the current ingestion processes.

Data Ingestion vs. Other Approaches

At first glance, data processing approaches can look alike. However, each one serves a distinct purpose, and the differences are greater than they seem.

Data Ingestion

- Definition: The process of collecting and importing data from various sources into a storage system for further processing or analysis.

- Purpose: To gather raw data efficiently and reliably from diverse sources.

ETL (Extract, Transform, Load)

- Definition: A workflow that involves extraction from source systems, transformation into a suitable format, and loading it into a target system, typically a data warehouse.

- Purpose: To clean, enrich, and structure information before storage, ensuring it meets analytical requirements.

ELT (Extract, Load, Transform)

- Definition: A modern processing approach where data is first extracted and loaded into the target system, and then transformed within that system.

- Purpose: To leverage the processing power of modern data warehouses for transformation tasks, allowing for scalability and flexibility.

Data Integration

- Definition: The process of combining information from different sources to provide a unified view, often involving data ingestion, transformation, and loading.

- Purpose: To ensure consistency and accessibility of data across an organization, facilitating comprehensive analysis.

Comparison Table

| Aspect | Data Ingestion | ETL | ELT | Data Integration |
|---|---|---|---|---|
| Primary Function | Collect and import | Extract, transform, then load | Extract, load, then transform | Combine data from multiple sources |
| Transformation | Not involved | Performed before loading | Performed after loading | May involve transformation processes |
| Processing Location | Source or intermediary system | External processing engine | Target system (e.g., data warehouse) | Across various systems |
| Use Cases | Real-time collection, streaming | Data warehousing, compliance reporting | Big data analytics, cloud data lakes | Enterprise-wide data analysis |
| Latency | Low (real-time or near real-time) | Higher (due to transformation step) | Lower (transformation post-loading) | Varies depending on implementation |
| Flexibility | High (supports various data formats) | Moderate (structured data preferred) | High (handles structured/unstructured) | High (integrates diverse sources) |
| Complexity | Low to Moderate | High (due to transformation logic) | Moderate (leverages target system's power) | High (requires coordination across systems) |

6 Common Data Ingestion Myths (and the Truth Behind Them)

Misunderstandings about how data ingestion works can lead to delays, broken dashboards, or additional costs. Let’s debunk a few of the most common myths.

Myth 1: “Data Ingestion Is Just so Simple”

Fact: It might seem simple initially, but as volume grows, so do the challenges. You’ll face duplicate records, missing values, evolving schemas, and performance issues. Scaling takes planning, tools, and ongoing oversight.

Myth 2: “All Data Needs to be Ingested”

Fact: More information doesn’t always mean better results. Pulling in every available record can overwhelm your systems and cost you a fortune. Focus on what’s relevant and actionable for your business goals.

Myth 3: “Data Ingestion is a One-Time Activity”

Fact: Even with automation, ingestion isn’t a one-time action. APIs may break, schemas may change, and data quality issues may occur. Regular monitoring and tuning are essential to keep things running smoothly.

Myth 4: “Technology Solves Everything”

Fact: Even the best tools can’t fix a flawed strategy. Successful ingestion depends on clear goals, good governance, and collaboration between teams.

Myth 5: “Real-Time Ingestion Is Always Better”

Fact: Real-time sounds impressive, but it’s not always needed. It can be costly to maintain. If your reports run daily or weekly, batch ingestion might be more practical and affordable.

Myth 6: “You Need a Big Data Team to Do This Right”

Fact: While complex pipelines do require expertise, today’s tools have made ingestion far more accessible. Low-code platforms and prebuilt connectors let even small teams build solid pipelines without heavy engineering.

Conclusion

Data ingestion is the backbone of any modern pipeline. It gets records from scattered sources into a single place where they can deliver value.

Whether you're using batch, real-time, or hybrid ingestion, the key is aligning your method with your business goals.

Understanding the different types of data ingestion, tackling common challenges like quality and schema changes, and following best practices like automation and monitoring will help you build pipelines that are both resilient and scalable.

FAQ

- 1. What is data ingestion, and why is it important?

- Data ingestion is the process of collecting and importing data from various sources, such as databases, cloud apps, IoT devices, and flat files, into a central system like a data warehouse or data lake. It’s essential for enabling analytics, real-time decision-making, and machine learning by making raw data accessible and usable.

- 2. What are the different types of data ingestion?

- There are several core types: batch ingestion, real-time ingestion, micro-batching, and hybrid ingestion.

- 3. How does data ingestion differ from ETL or data integration?

- Data ingestion is about moving data from one or more sources into a destination system. ETL and ELT involve an additional transformation step. Data integration is a broader concept that includes ingestion, transformation, and unifying information across systems.

- 4. What are the most common challenges in data ingestion?

- Some of the biggest ingestion challenges include scalability, latency, data quality, and integration complexity.

- 5. How do I choose the right data ingestion tool?

- Look for tools that support both real-time and batch processing, offer automation and monitoring, work with required sources and formats, scale easily with your data volume, and provide a user-friendly interface or API access.

- 6. Can data ingestion be automated?

- Absolutely. Most modern platforms support automated pipelines triggered by schedules or real-time events. Automation reduces manual errors, speeds up data flow, and ensures pipelines run consistently, even across complex environments.