Most teams already have plenty of data. The real problem is that it's scattered across tools that don't talk to each other. An ETL pipeline exists to fix that. It pulls data from different sources, cleans and reshapes it, and delivers it to a place where it can be used. Done right, it turns pieces of information into something reliable, current, and ready for analysis.

In this guide, we'll break down what an ETL pipeline is, how it works, and why it matters. Expect honest advice on building workflows that won't embarrass you in three months and a tour of tools that aren't vaporware.

What Are ETL Pipelines?

An ETL pipeline is an automated process that extracts data from multiple sources, straightens out the mess, and loads it into your data warehouse or analytics system.

Every application in your company stores information differently because, apparently, software developers have never met each other. Left alone, data multiplies. The pipeline hauls it all out, fixes what's broken, and puts it somewhere your team can use with confidence.

It contains three parts: extract, transform, and load. Done.

Extract

Extraction is the moment data leaves its original home.

This step pulls raw data from sources like databases, cloud apps, files, or APIs. At this point, nothing is corrected or rearranged. The goal is simple: collect what exists, accurately and safely.

Extraction can happen in near real time, at regular intervals, or on demand. In many setups, only new or changed records are pulled to avoid unnecessary load.

The extracted data is usually placed into a temporary staging area before any processing begins.
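As a rough illustration of extraction into staging, here is a minimal Python sketch. The CSV content, the field names, and the `extract` and `stage` helpers are all made up for this example. The point is only that rows are copied as they are, messy whitespace and mixed date formats included.

```python
import csv
import io
import json

# Hypothetical raw export from a source system. Values are left untouched on purpose.
SOURCE_CSV = """id,email,signup_date
1, Ana@Example.com ,2024-01-05
2,bob@example.com,05/01/2024
"""


def extract(source_text: str) -> list[dict]:
    """Read every row exactly as the source provides it (no cleaning yet)."""
    return list(csv.DictReader(io.StringIO(source_text)))


def stage(rows: list[dict]) -> str:
    """Serialize raw rows as JSON Lines, one common choice for a staging format."""
    return "\n".join(json.dumps(row) for row in rows)


raw_rows = extract(SOURCE_CSV)
staged = stage(raw_rows)
print(staged)
```

In a real setup the staging area would more likely be a file, a bucket, or a staging table than an in-memory string.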

Transform

Transformation is where order is created.

Data from different systems rarely lines up on its own. Formats don't match, values are inconsistent, and rules vary between tools. The transform step cleans things up and applies structure.

This stage is also where data stops being "copied" and starts being meaningful. Without transformation, analysis is unreliable, dashboards disagree, and trust erodes quickly.
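To make that concrete, here is a small sketch of a transform step in Python. It assumes hypothetical customer records with `id`, `email`, and `signup_date` fields, and it assumes the sources use only two date formats. Real data will have more.

```python
from datetime import datetime

# Assumed source date formats. Extend this tuple for your own systems.
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def normalize_date(value: str) -> str:
    """Return an ISO 8601 date, trying each assumed source format in turn."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {value!r}")


def transform(row: dict) -> dict:
    """Apply types and consistent formatting to one raw record."""
    return {
        "id": int(row["id"]),
        "email": row["email"].strip().lower(),
        "signup_date": normalize_date(row["signup_date"]),
    }


raw = [
    {"id": "1", "email": " Ana@Example.com ", "signup_date": "2024-01-05"},
    {"id": "2", "email": "bob@example.com", "signup_date": "05/01/2024"},
]
clean = [transform(r) for r in raw]
```

Raising on an unknown date format is a deliberate choice here: a value the pipeline can't interpret is better surfaced than guessed.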

Load

Loading is the final handoff.

After cleaning everything up, you drop it into the destination - warehouse, database, BI tool, whatever system needs feeding.

Loading happens either in big gulps on a schedule or constantly as new data arrives. It depends on:

- Whether your data changes every minute or sits pretty for days.

- Whether someone will panic if the numbers aren't bleeding-edge fresh.

Now it's usable. People can analyze it, build dashboards off it, and automate stuff with it.
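A scheduled batch load can be sketched like this, with an in-memory SQLite database standing in for the real destination. The `customers` table and its columns are assumptions for the example. Using `INSERT OR REPLACE` keyed on `id` is one way to make a rerun of the same batch harmless.

```python
import sqlite3


def load(conn: sqlite3.Connection, rows: list[dict]) -> int:
    """Load one batch into the destination and return the resulting row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers ("
        "id INTEGER PRIMARY KEY, email TEXT NOT NULL, signup_date TEXT NOT NULL)"
    )
    with conn:  # commits on success, rolls back if any insert fails
        conn.executemany(
            "INSERT OR REPLACE INTO customers (id, email, signup_date) "
            "VALUES (:id, :email, :signup_date)",
            rows,
        )
    return conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]


conn = sqlite3.connect(":memory:")  # stand-in for a warehouse connection
batch = [
    {"id": 1, "email": "ana@example.com", "signup_date": "2024-01-05"},
    {"id": 2, "email": "bob@example.com", "signup_date": "2024-01-05"},
]
total = load(conn, batch)
total_after_rerun = load(conn, batch)  # same batch again, no duplicates created
```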

ETL vs. ELT vs. Data Pipelines

"Data pipeline" is an umbrella term. It means about seventeen different things depending on whom you ask. Figuring out what everyone's talking about before you start carving saves you from buying the promising but wrong tools.

Clarity here doesn't just prevent architectural mistakes. It saves time, cost, and a lot of backtracking later. So, let's see where the boundaries lie.

Data Pipeline: The Bigger Picture

A data pipeline is any automated system that moves data from one place to another.

It might copy data as-is, stream events in real time, replicate records for backup, or trigger workflows across services. Some pipelines transform data along the way. Others don't touch it at all. Speed, reliability, and delivery are the main goals.

Examples include:

- Database replication

- Log or event streaming

- Raw data ingestion into a lake

- ELT workflows

- Simple app-to-app syncs

ETL Pipeline: The Specialized Refiner

An ETL pipeline is a type of data pipeline, built for a more deliberate purpose: preparing data for analysis.

ETL pipelines make sense when you care more about getting the data right than getting it right now: quarterly reports, compliance filings, executive dashboards, etc.

ELT Pipeline: The Modern, Cloud-Native Alternative

With ELT, you load the messy data first and shape it later, whenever the need arises (whether the whole dataset or separate parts are required at the moment). Transformation is now a data warehouse's responsibility because modern ones are fast enough to pull it off.

ELT's everywhere now in cloud setups where teams want flexibility and speed without worrying about outgrowing their infrastructure.
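The load-first, transform-later order can be sketched in a few lines of Python. SQLite stands in for a cloud warehouse here, and the `raw_orders` table, the `orders_clean` view, and their columns are hypothetical. The raw rows land untouched, and the cleanup is expressed as SQL that runs inside the destination.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse

# Load: raw values go in as text, exactly as they arrived.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT, status TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [
        ("1001", "19.90", " PAID "),
        ("1002", "5.00", "refunded"),
        ("1003", "42.10", "paid"),
    ],
)

# Transform: typing and cleanup happen later, in the destination, as SQL.
conn.execute(
    """
    CREATE VIEW orders_clean AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           CAST(amount AS REAL)      AS amount,
           LOWER(TRIM(status))       AS status
    FROM raw_orders
    """
)

paid_total = conn.execute(
    "SELECT ROUND(SUM(amount), 2) FROM orders_clean WHERE status = 'paid'"
).fetchone()[0]
raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
```

Because the raw table stays in place, a new question later only needs a new view, not a new extraction.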

Key Differences at a Glance

| Criterion | ETL | ELT |
|---|---|---|
| Processing order | Transform before loading | Load first, transform in the destination |
| Data availability | Only after the transformations finish | Raw data available immediately |
| Scalability | Limited by pre-load processing power | Scales with cloud warehouse resources |
| Flexibility | Rigid; changes require pipeline updates | Flexible; adapts easily to new data |
| Latency | Higher | Lower |
| Data types | Mostly structured | Structured, semi-structured, unstructured |
| Compliance handling | Easier to mask or filter before load | Requires careful post-load controls |
| Storage usage | Stores only transformed data | Stores raw and transformed data |
| Maintenance effort | Higher due to multiple stages | Lower with cloud-native tooling |
| Typical environments | On-prem, legacy systems, regulated setups | Cloud-native analytics stacks |
| Common destinations | Data warehouses | Cloud warehouses and lakes |
| Popular use cases | BI, reporting, regulated analytics | Big data, analytics, ML, experimentation |

If everything gets labeled as a "pipeline," it's easy to expect one tool to handle ingestion, cleanup, compliance, analytics, and experimentation all at once. That usually ends in frustration.

Understanding the difference helps you be intentional:

- Data pipelines describe movement.

- ETL and ELT describe preparation.

- ETL vs. ELT determines where the work happens and how much flexibility you keep later.

Most mature setups use a mix of ETL consistency and ELT exploration possibilities, all tied together by broader data pipelines.

Once that’s clear, you stop doing simple jobs the hard way, and you stop forcing analytical workloads into pipelines that were never meant to support them.

Why Your Business Needs ETL Pipelines: Key Benefits and Use Cases

As businesses grow, data tends to spread faster than processes do. New tools appear, teams adopt their own systems and coping mechanisms, and before long, numbers don’t line up the way they should. ETL pipelines exist to bring order to that reality. Not as a technical luxury, but as infrastructure your business quietly depends on.

Better Data Quality and Consistency

Most data enters your systems in rough shape. Formats don’t match, fields are missing, and values contradict each other. An ETL pipeline standardizes this before it reaches reports or dashboards, where it could do real harm.

That includes tasks such as removing duplicates, standardizing formats, aligning fields to a common schema, combining datasets, or applying business rules that provide context for raw values.
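Two of those tasks, aligning fields to a common schema and removing duplicates, might look like this in Python. The source names (`crm`, `billing`), their field names, and the choice of email as the matching key are all assumptions made for the sketch.

```python
# Hypothetical mapping from each source's field names to the common schema.
FIELD_MAP = {
    "crm": {"Email": "email", "FullName": "name"},
    "billing": {"customer_email": "email", "customer_name": "name"},
}


def to_common_schema(source: str, record: dict) -> dict:
    """Rename source-specific fields and standardize their formatting."""
    row = {target: record[src].strip() for src, target in FIELD_MAP[source].items()}
    row["email"] = row["email"].lower()
    return row


def deduplicate(rows: list[dict]) -> list[dict]:
    """Keep the first record seen for each email address."""
    seen: dict[str, dict] = {}
    for row in rows:
        seen.setdefault(row["email"], row)
    return list(seen.values())


crm = [{"Email": "Ana@Example.com", "FullName": "Ana Ruiz"}]
billing = [
    {"customer_email": "ana@example.com ", "customer_name": "Ana Ruiz"},
    {"customer_email": "bob@example.com", "customer_name": "Bob Lee"},
]

combined = [to_common_schema("crm", r) for r in crm]
combined += [to_common_schema("billing", r) for r in billing]
unique = deduplicate(combined)
```

"First record wins" is the simplest possible rule. Which source should win a conflict is a business decision, not a technical one.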

The result is fewer corrections downstream and far less time spent questioning where a number came from.

Single Source of Truth

When each team looks at a different dataset, alignment becomes a ballpark figure. ETL brings together data from your CRM, finance systems, product tools, and ops into one spot – a single source of truth. That’s a lot of different tools, each with its own way of handling, storing, and organizing data.

Finally, teams can stop wasting time reconciling different versions and can make fast yet accurate decisions.

Operational Efficiency

Manual data handling has its place, but it doesn't survive growth. Automated pipelines replace recurring exports, copy-paste routines, and fragile scripts with repeatable processes.

Teams regain time (and what can be more precious?), reduce errors, and stop treating data preparation as a weekly task that interrupts real work.

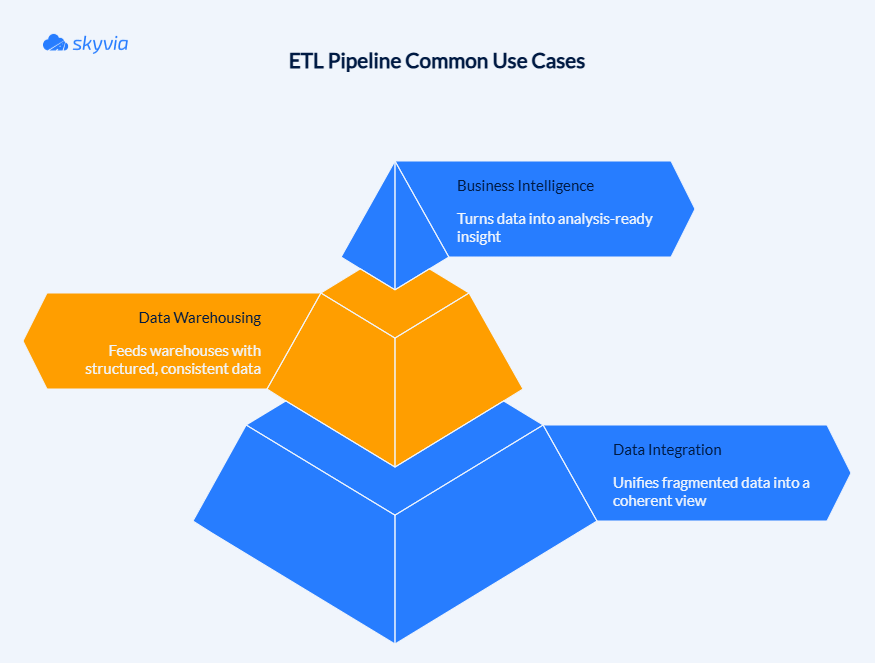

ETL Pipelines in Action: Common Use Cases

Business Intelligence and Analytics

ETL pipelines sit quietly behind most dashboards people trust. They pull data from tools like CRM systems, billing platforms, product databases, and logs, then clean and align it before anything reaches a chart. That’s how teams can compare customer behavior to revenue, track performance over time, or spot patterns without debating whose numbers are “right”.

In practice, ETL turns scattered operational data into something analytics teams can work with day after day.

Data Warehousing

A warehouse needs discipline, or it's worthless. ETL pipelines provide:

- Data extraction from operational systems

- Reshaping it so it all speaks the same language

- Loading it fast

Data Migration and Consolidation

When systems change, data has to move without breaking business processes. When it’s time to abandon that creaky old system everyone’s terrified to touch, ETL pipelines handle the breakup.

They also fix the mess where every department and geography has been running their own parallel universe of data. It’s data consolidation in the truest sense – merge it so you’re operating as one company instead of a confederation of confused states.

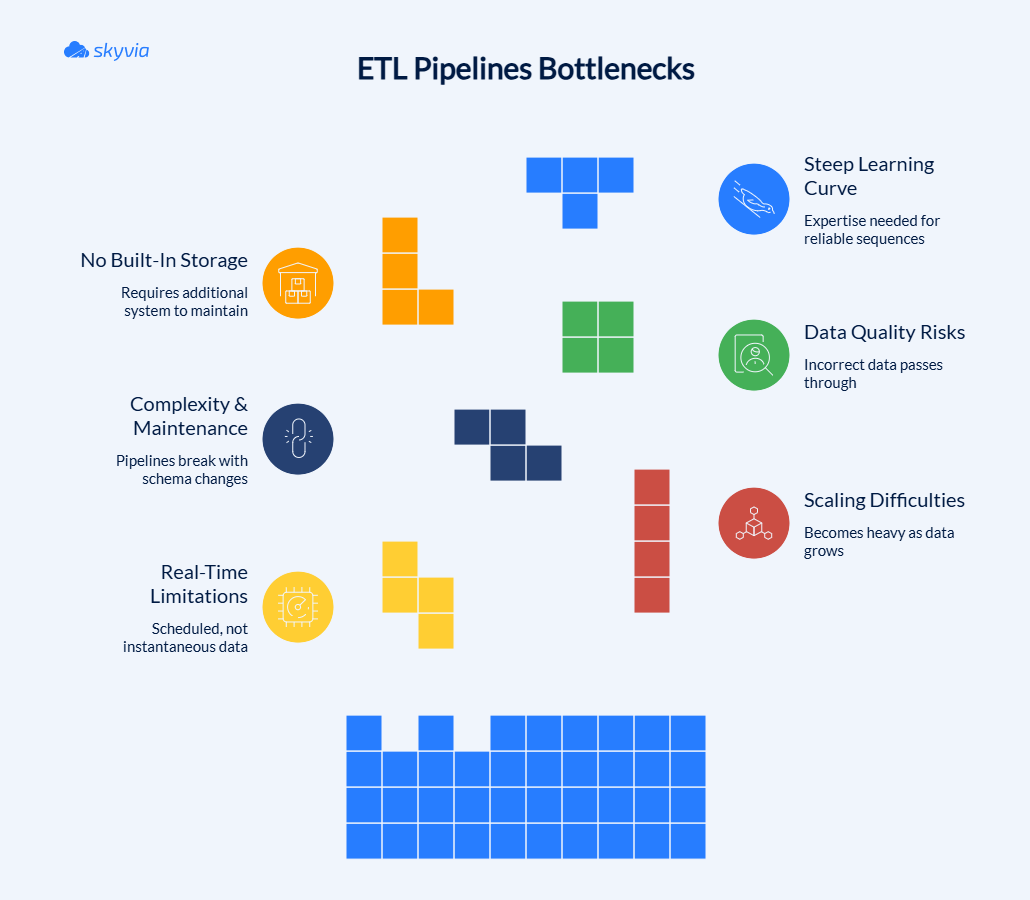

Key Disadvantages of ETL Pipelines

ETL pipelines are solid and dependable, which is exactly why they can be inflexible. They work best when data moves in predictable patterns. Once things start shifting faster, growing wider, or changing shape, some cracks begin to show.

Not Built for Real-Time Needs

Most ETL pipelines move data on a schedule. That’s fine for daily reports or overnight processing, but less ideal when teams expect numbers to reflect what just happened. There’s usually a delay baked in, even if everything is working perfectly.

Scaling Pains

As data volumes increase or new sources are added, ETL setups tend to grow heavier. More steps, more resource tuning, more cost decisions. It’s possible to scale, but rarely as easily as adding more resources. It usually means going back and redesigning pieces.

Complexity and Maintenance

ETL pipelines depend on upstream systems behaving the way they used to. When schemas shift, APIs change, or fields appear and disappear, pipelines need attention. Someone has to notice, investigate, and fix things before downstream data gets affected.

Data Quality and Brittleness

ETL logic assumes a certain structure. When that structure changes unexpectedly, pipelines might not take this with dignity. They can break or quietly pass along incorrect data. Catching these issues early requires solid monitoring and regular validation.

No Built-In Storage

ETL prepares data, but it doesn’t give it a permanent home. You still need a data warehouse or lake to land the results, which adds another system to manage and another cost center to plan for.

Steep Learning Curve

Visual builders are nice, but that doesn’t mean you don’t have to understand why your customer table needs to load before your orders table. ETL pipelines are dependable when the environment is stable and the rules are clear. They just aren’t effortless once complexity, scale, or speed enters the picture. Knowing that upfront makes them far easier to use well.
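The customers-before-orders point is a dependency-ordering problem, and it can be sketched with Python's standard `graphlib` module (Python 3.9+). The table names and their dependencies below are hypothetical.

```python
from graphlib import TopologicalSorter

# Each table maps to the set of tables that must be loaded before it (hypothetical).
DEPENDENCIES = {
    "customers": set(),
    "products": set(),
    "orders": {"customers"},
    "order_items": {"orders", "products"},
}

# static_order() yields every table after all of its prerequisites.
load_order = list(TopologicalSorter(DEPENDENCIES).static_order())
print(load_order)
```

A visual builder hides this ordering behind arrows on a canvas, but the rule underneath is the same.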

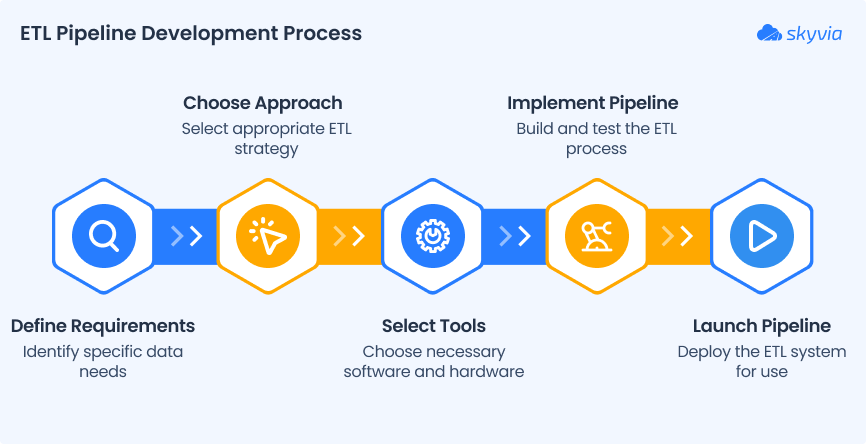

Building Your First ETL Pipeline: A Step-by-Step Guide

An ETL pipeline doesn’t need to be elegant on day one. It needs to be useful, understandable, and stable enough that people trust the data coming out of it.

The steps we offer below aren’t about perfection. There will be time for it when everything is set up and running. They are about avoiding the familiar disappointments that happen when teams get too tool-happy before doing the boring part of figuring out requirements.

Step 1: Define Requirements

This is the part that doesn’t get anyone excited, and blowing past it is how you create problems you’ll be fixing later.

Start by forgetting tools entirely, for a moment, of course. Ask what the business needs from this pipeline:

- Is it feeding dashboards?

- Powering reports for finance?

- Keeping systems in sync?

Different answers lead to very different designs.

Then get specific:

- Which systems matter here?

- How outdated can the numbers get before your stakeholders lose their minds?

- What happens when the whole thing implodes overnight, and you find out about it first thing in the morning?

- Who should see this data, and who shouldn’t lay eyes on it?

If there’s any chance compliance will rear its head later, deal with it now instead of during some nightmare audit.

Step 2: Choose Your Approach

There’s no universally “best” way to build ETL. There’s only what fits your team.

- Hand-built pipelines give total control, but they also lock you into maintaining scripts, connectors, dependencies, and edge cases. Once the excitement wears off, that upkeep can disrupt the workflow for everyone involved. It’s fine if you have engineers and time. Many teams don’t.

- On-prem tools make sense in regulated environments or when data can’t leave your infrastructure, but they come with real operational weight.

- Cloud ETL and iPaaS platforms give up a bit of control in exchange for not losing your mind, which is perfect for teams that need to ship things and adapt quickly. Tools like Skyvia exist exactly for this middle ground. No servers to maintain, drag-and-drop instead of Frankensteining scripts together, and monitoring that exists.

Pick the approach your future self won’t curse you for, not something that looks cool at the moment.

Step 3: Design and Develop

This phase is more about doing than theorizing, yet your understanding and ingenuity will come in handy like never before.

- Map the pipeline from start to finish before building anything. Keep the flow readable. Someone else should be able to open it and understand what’s happening without a guided tour.

- Build in small pieces. Load a subset of data, apply one transformation at a time, and confirm assumptions early. Pipelines that grow organically tend to survive longer than those built “complete” in one exhausting sprint.

- Design with change in mind because it's already waiting around the corner. Sources will change. Fields will disappear. Logic will evolve. And your pipelines have to be ready to face these challenges.

Step 4: Test and Validate

Many cut this corner, thinking they’ll come back to it later. Spoiler: they rarely do, and it becomes a problem at the worst possible time.

A pipeline that executes without errors can still be completely wrong.

- Test whether the data makes sense.

- Spot-check records.

- Compare totals.

- Look for gaps, duplicates, and weird formatting that only shows up in real usage.

Also, test failure.

- What happens if a source is empty?

- If credentials expire?

- If today’s data arrives late?

A pipeline that fails loudly and predictably is far better than one that “sort of” succeeds but brings surprises along.
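The checks above can be wired into a small validation step. This is a sketch under assumptions: the `id` and `amount` field names are hypothetical, and the four rules shown (non-empty source, no duplicate keys, matching row counts, matching totals) are examples rather than a complete list.

```python
class ValidationError(Exception):
    """Raised when pipeline output fails a sanity check."""


def validate(source_rows: list[dict], loaded_rows: list[dict]) -> None:
    """Raise ValidationError on the first failed check; return None if all pass."""
    if not source_rows:
        raise ValidationError("source returned no rows")

    keys = [row["id"] for row in loaded_rows]
    if len(keys) != len(set(keys)):
        raise ValidationError("duplicate ids in output")

    if len(source_rows) != len(loaded_rows):
        raise ValidationError(
            f"row count mismatch: {len(source_rows)} in, {len(loaded_rows)} out"
        )

    source_total = round(sum(row["amount"] for row in source_rows), 2)
    loaded_total = round(sum(row["amount"] for row in loaded_rows), 2)
    if source_total != loaded_total:
        raise ValidationError(
            f"totals differ: {source_total} in source, {loaded_total} loaded"
        )


source = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 5.5}]
validate(source, list(source))  # passes silently

try:
    validate([], [])  # an empty source should stop the run, not produce empty reports
except ValidationError as exc:
    print(f"run aborted: {exc}")
```

Raising an exception instead of logging and carrying on is the "fail loudly" part: the scheduler sees a failed run, and nobody has to notice a quiet gap in a dashboard.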

Once people start relying on the output, trust becomes the real currency. Testing is how you earn that trust.

Build a pipeline you can read without your eyebrows going all the way up, something that runs the same way twice, and something you can adjust without accidentally breaking twelve other things. Those fundamentals matter infinitely more than clever architecture.

Best ETL Pipeline Tools for 2026

Not every ETL tool is trying to solve the same problem, even if the landing pages say otherwise. Some platforms want to disappear into the background and just move data. Others want to become the place where your entire data operation lives. And a few are clearly built for teams who prefer sharp tools over safety rails.

Ranking tools numerically misses the point entirely. The real question is what kind of work each one is built for. Sort by that and you’ll understand your options.

Cloud-Based / No-Code Platforms

Here, you sacrifice some infrastructure flexibility, but in exchange, you get a system that doesn't punish you for not being a DevOps wizard. Forget about servers. You don’t wire up schedulers. You just focus on what moves, when it moves, and what shape it should land in.

Skyvia

Skyvia is a platform that expects your requirements to change at any stage. You can start with a simple import, layer in transformations, add scheduling, then eventually stitch together multi-step workflows without being pushed into a new product or pricing cliff.

With 200+ connectors and a free tier, you can start building your pipeline empire the same day you realize you need one.

When your needs become advanced, you can try Data Flow and Control Flow. They take a bit more commitment and a better understanding of how the platform works, but remain simple to navigate.

It’s especially comfortable for teams that want control without turning data integration into a full-time engineering project.

Hevo Data

Hevo is for teams that want pipelines up and running fast and don’t want to watch them afterward. You connect sources, choose a destination, define transformations if needed, and let it run.

It works well in analytics-first setups where reliability matters more than deep customization. You get flexibility through Python when required, but the platform clearly prioritizes ease and low maintenance over fine-grained control.

Integrate.io

Integrate.io is what teams reach for when pipelines stop being simple. Branching logic, CDC, compliance requirements, and near-real-time syncs are part of the baseline here.

There’s more structure, more knobs, and more responsibility. That makes it powerful, but also heavier. It fits organizations that already treat data pipelines as core infrastructure rather than supporting tooling.

Fivetran

Fivetran’s pitch is simple: set it up once and stop thinking about it. It handles schema changes quietly, keeps connectors updated, and pushes data into your warehouse on schedule.

There’s very little design work involved, by intention. If your transformations live in dbt and your main concern is dependable ingestion at scale, this approach makes sense. Costs need attention as volumes grow, but operationally, it stays calm.

Matillion

Matillion allows you to connect to different data sources and load their data into a data warehouse while applying different data transformations.

It offers a simple GUI for configuring ETL pipelines and lets you write Python code for advanced cases.

Matillion supports over 150 connectors and has a free tier that includes 500 complimentary Matillion Credits. This trial grants access to the full Enterprise edition features of the Data Productivity Cloud.

Rivery

Rivery sits somewhere between structured ETL and workflow automation. It offers strong orchestration, ready-made templates, and enough flexibility to support both ETL and reverse ETL without friction.

Teams that want a managed platform but still need API access and customization tend to land here. It feels less opinionated than some competitors, which can be either a benefit or a downside depending on how decisive your data strategy already is.

Open-Source Frameworks & Tools

These are not about speed to the first pipeline. They’re about control, extensibility, and living close to the metal.

Apache Spark

Spark is a processing engine. It thrives on volume, complexity, and distributed workloads - and expects you to know what you’re doing.

If your data challenges are genuinely large-scale or computationally heavy, Spark delivers. Just be ready to invest in engineering time, monitoring, and ongoing maintenance.

Meltano

Meltano appeals to teams that want transparency and modularity. It embraces plugins, version control, and automation, and fits neatly into modern DataOps practices.

There’s no abstraction pretending this is for everyone. Meltano rewards teams that are comfortable with code, tooling, and ownership of the full pipeline lifecycle.

Talend Open Studio

Talend Open Studio is a visual designer sitting on top of code you can actually see and modify. Teams pick it when they want something straightforward today but don't trust cloud vendors not to pull the rug out, whether that's pricing, features, or just deciding your use case isn't profitable enough anymore. Managing it yourself is work, but you're not beholden to someone else's quarterly earnings call.

Pentaho Data Integration


Pentaho merges point-and-click pipelines with code when you need it, plus components you can recycle. It’s refreshingly boring in a good way, and thrives in places where half the team codes and half the team doesn’t.

The community edition covers a lot of ground, while paid options add support and enterprise safeguards.

Best Practices for Robust and Scalable ETL Pipelines

These practices focus on keeping pipelines dependable when reality gets messy.

Implement Incremental Loading

Reprocessing the full dataset on every run is expensive repetition. Incremental loading isolates what has changed: new rows, updated fields, and deletions. Change Data Capture (CDC) handles this well by reading database transaction logs, which adds little load to source systems. If CDC isn’t available, timestamp filters or sequential IDs work, provided you account for delayed records and time zone weirdness.

The reliable pattern would be:

- Initial full load for the foundation.

- Incremental updates for ongoing runs.

- Periodic reconciliation passes to spot anomalies early.
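Here is a minimal sketch of the timestamp-filter variant, not CDC. It assumes every record carries a timezone-aware UTC `updated_at` value, and the ten-minute lookback window is an arbitrary allowance for late-arriving records. Re-reading that window is safe only because rows are upserted by key.

```python
from datetime import datetime, timedelta, timezone

LOOKBACK = timedelta(minutes=10)  # assumed allowance for late-arriving records


def extract_changes(source_rows: list[dict], watermark: datetime) -> list[dict]:
    """Return rows changed since the watermark, minus a safety overlap."""
    cutoff = watermark - LOOKBACK
    return [row for row in source_rows if row["updated_at"] > cutoff]


def apply_changes(target: dict, changes: list[dict], watermark: datetime) -> datetime:
    """Upsert by id so re-read rows are harmless, then return the new watermark."""
    for row in changes:
        target[row["id"]] = row
    return max([watermark] + [row["updated_at"] for row in changes])


def utc(hour: int, minute: int) -> datetime:
    """Helper for the example: a fixed-day UTC timestamp."""
    return datetime(2024, 1, 1, hour, minute, tzinfo=timezone.utc)


source = [
    {"id": 1, "updated_at": utc(9, 0)},
    {"id": 2, "updated_at": utc(9, 55)},  # arrived late, after the previous run
    {"id": 3, "updated_at": utc(10, 5)},
]
target = {1: source[0]}  # state after the initial full load
watermark = utc(10, 0)   # where the previous run stopped

changes = extract_changes(source, watermark)
watermark = apply_changes(target, changes, watermark)
```

A filter like this never sees deleted rows, which is one reason the periodic reconciliation pass is still needed.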

Best Practices for Logging and Monitoring

Pipelines don’t fail loudly by default. They whisper, then shrug, then keep going with bad data. Logging and monitoring are how you hear that careless whisper.

Logs are your paper trail: what kicked off, which sources fed the pipeline, row counts that moved, and anomalies worth noting. Metrics should chart trajectories, not merely flash warnings when thresholds get crossed. Latency creeping up or row counts slowly shrinking matter just as much as outright failures. The best setups also watch business signals. If daily revenue drops to zero or order counts spike unexpectedly, the pipeline should raise a hand before a human does.
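One way to watch a trend rather than a fixed threshold is to compare each run against its own recent history. The sketch below uses Python's standard `logging` module. The logger name, the 50% tolerance, and the `record_run` helper are assumptions for illustration.

```python
import logging
from statistics import mean

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("etl.orders")  # hypothetical pipeline name


def is_anomalous(history: list[int], current: int, tolerance: float = 0.5) -> bool:
    """Flag a run whose row count deviates from the trailing average by more than tolerance."""
    if not history:
        return False  # nothing to compare against yet
    baseline = mean(history)
    if baseline == 0:
        return current != 0
    return abs(current - baseline) / baseline > tolerance


def record_run(history: list[int], rows_loaded: int) -> bool:
    """Log the run, warn if it looks unusual, and add it to the history."""
    log.info("run finished rows_loaded=%d", rows_loaded)
    anomalous = is_anomalous(history, rows_loaded)
    if anomalous:
        log.warning(
            "row count %d deviates from trailing average %.1f",
            rows_loaded,
            mean(history),
        )
    history.append(rows_loaded)
    return anomalous


history = [1000, 1020, 980]
normal_run = record_run(history, 990)
empty_run = record_run(history, 0)  # succeeds technically, but loads nothing
```

The same comparison works for business signals such as daily revenue or order counts. Only the metric changes.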

How to Scale an ETL Pipeline

Scaling works best when it’s boring. Modular design helps here. Treat extraction, transformation, and loading as independent stages so each can grow without dragging the others along. Parallelism is your friend. Slice work by time, region, or entity and process in chunks. Push filtering and aggregation upstream to cut volume early. When traffic spikes, queues and staging storage smooth things out instead of letting systems tip over. Most importantly, profile real runs. The slow parts are rarely where diagrams suggest they’ll be.
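Slicing by time and processing the slices in parallel can be sketched with the standard library's `concurrent.futures`. The `process_day` function below is a placeholder that only simulates a row count. In a real pipeline it would extract, transform, and load one day of data.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import date, timedelta


def daily_slices(start: date, end: date) -> list[date]:
    """Split the half-open range [start, end) into one slice per day."""
    return [start + timedelta(days=i) for i in range((end - start).days)]


def process_day(day: date) -> tuple[date, int]:
    """Placeholder for one day's extract-transform-load; returns a simulated row count."""
    simulated_rows = day.day * 10
    return day, simulated_rows


days = daily_slices(date(2024, 1, 1), date(2024, 1, 8))

# Threads suit I/O-bound work such as API calls and database reads.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = dict(pool.map(process_day, days))

total_rows = sum(results.values())
```

Each slice is independent, so a failed day can be retried on its own without rerunning the whole week.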

Ensure Data Quality and Security

Fast pipelines are useless if the output can’t be trusted. Quality checks belong in the pipeline itself, not in the autopsy after things break. Validate schemas, enforce constraints, and watch for gradual shifts in distributions. When data doesn’t pass, stop it or quarantine it. Quiet failures are worse than loud ones.
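A quarantine step might look like the following sketch. The required fields, their types, and the non-negative amount rule are invented for the example. Rows that fail are set aside along with the reasons, so someone can review them later.

```python
# Hypothetical schema: field name mapped to its expected Python type.
REQUIRED_FIELDS = {"order_id": int, "amount": float, "currency": str}


def find_issues(row: dict) -> list[str]:
    """Return a list of schema and constraint violations for one row."""
    issues = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in row:
            issues.append(f"missing {field}")
        elif not isinstance(row[field], expected):
            issues.append(f"{field} is not {expected.__name__}")
    if isinstance(row.get("amount"), float) and row["amount"] < 0:
        issues.append("amount is negative")
    return issues


def split_rows(rows: list[dict]) -> tuple[list[dict], list[dict]]:
    """Separate rows that pass every check from rows to quarantine."""
    accepted, quarantined = [], []
    for row in rows:
        issues = find_issues(row)
        if issues:
            quarantined.append({"row": row, "issues": issues})
        else:
            accepted.append(row)
    return accepted, quarantined


incoming = [
    {"order_id": 1, "amount": 19.9, "currency": "USD"},
    {"order_id": 2, "amount": 5.0},
    {"order_id": 3, "amount": -5.0, "currency": "EUR"},
]
accepted, quarantined = split_rows(incoming)
```

Whether a single bad row should quarantine itself or stop the whole run depends on how much the downstream numbers can tolerate.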

Security follows the same philosophy:

- Encrypt by default.

- Keep access permissions tight enough to make people justify their requests.

- Cycle credentials regularly.

- Preserve lineage so you can reconstruct the path when someone doubts your data at the worst possible moment.

Use Version Control and Documentation

Pipelines evolve. Problems start when changes aren’t visible.

Think of pipelines as deployable software: version them properly, test before release, push changes with care. Reverting to a known good state in seconds is vastly preferable to reconstructing last week’s modifications from foggy recollections and git blame.

Strong ETL pipelines aren’t built by stacking features. They’re built by respecting how data behaves over time: slowly, unpredictably, and usually under pressure. When the fundamentals are right, growth feels manageable instead of stressful.

Conclusion

If you’ve made it this far, you’ve covered the full arc: what an ETL pipeline actually is, how each stage fits together, where ETL stands compared to ELT and other data pipelines, how to build one that won’t collapse under real-world pressure, and the practices that keep it healthy as your business grows. The throughline is simple: when data moves cleanly and consistently, every team moves faster.

The world around these pipelines is changing fast. More teams want data the moment it’s generated. Cloud warehouses are strong enough to handle transformations at scale, pushing ELT into the mainstream. And AI’s taking on support roles: aligning data fields, detecting irregularities, enabling teams to monitor pipelines without constantly hovering.

The underlying mechanics haven’t shifted, but momentum’s clearly moving toward accelerated cycles, malleable infrastructure, and autonomous workflows.

Skyvia meets teams at any maturity level – brand new to data pipelines or knee-deep in connectors. It maintains a visual, friendly approach and can expand smoothly when needed. Additionally, it provides scheduling and monitoring tools that become critical once workflows grow. Simple when you want simple, configurable when you don’t.

Enough reading, time to build something. Try Skyvia and find out what happens when your data pipelines stop requiring constant attention because the platform does what it’s supposed to.

FAQ

What is the difference between a data pipeline and an ETL pipeline?

A data pipeline is any process that moves data between systems, with or without transformation. An ETL pipeline specifically extracts, transforms, and loads data into a target system for analysis.

Can I build an ETL pipeline without coding?

Yes! Modern no-code/low-code ETL tools like Skyvia let you design, automate, and manage ETL pipelines using visual interfaces with no programming required.

How often should ETL pipelines run?

ETL pipelines can run on schedules (hourly, daily, etc.) or in real-time, depending on business needs and data freshness requirements.

Is ETL still relevant with data lakes and ELT?

Absolutely. ETL remains vital for data cleansing, compliance, and structured analytics, even as ELT and data lakes expand options for modern data architectures.