Change data capture (CDC) is the process of tracking and identifying changes to data so that other systems can act on them. Instead of scanning entire datasets every time, CDC records only the inserts, updates, and deletes.

CDC keeps systems in sync and up to date without repeatedly scanning all of the data.

This article covers why CDC matters, how it works, the different CDC methods, and the tools you need.

Let's begin.

Why Change Data Capture Matters

Change Data Capture matters because it makes handling data changes smoother and more efficient.

Consider the benefits of CDC and its application to other data management systems.

Benefits of CDC

- Efficiency: CDC reduces the need for full database scans. It tracks only the changes, so it saves time and resources.

- Real-time Data: It enables real-time data replication, so downstream systems stay up to date with minimal delay.

- Lower Latency: By capturing changes as they happen, CDC reduces the delay in data processing, making systems more responsive.

- Audit Trails: CDC provides a historical record of changes, so auditing and tracking become easier.

- Scalability: It helps systems scale by reducing the load on databases and networks. It also makes it easier to handle large volumes of data.

Applications of CDC in Other Data Management Systems

- Data Warehousing: CDC helps keep data warehouses updated with the latest information from operational databases without reloading everything.

- ETL Processes: In Extract, Transform, Load (ETL) processes, CDC helps move only the changed data, improving efficiency.

- Real-time Analytics: Companies use CDC to feed real-time data into analytics platforms. This ensures they always have the latest insights.

- Microservices: CDC can help microservices stay in sync by propagating data changes across different services.

- Data Replication: It's often used to replicate data across different systems. This ensures consistency without overwhelming resources.

How Change Data Capture Works

CDC works by monitoring and capturing inserts, updates, and deletes to your data, then making these changes available for further processing, replication, or synchronization. Here's a detailed breakdown of how CDC works and the components involved.

Monitoring Changes:

CDC keeps an eye on the database for any data modifications. It does this by tracking changes from any of the following:

- Transaction log

- Row-level timestamps

- Database snapshots

- Actions from database triggers

Some databases, like Microsoft SQL Server, support CDC natively, so no complex script is needed to scan for changes.

Capturing Changes:

When a change occurs, CDC captures the relevant data, like the old and new values, the table affected, and the time of the change.

Storing Changes:

CDC stores the captured changes in a format that's easy to consume, often in change tables within the same database or a separate storage system. These change tables or streams contain metadata like the type of operation (insert, update, delete), the affected columns, and timestamps.

Consuming Changes:

Applications or processes can subscribe to these change logs or streams to receive updates. This might involve an ETL process, data replication system, or any consumer interested in reacting to these changes.

Consumers might transform the data, send it to another system, or trigger specific actions based on the change.

Applying Changes:

The captured and transformed changes can be applied to target systems, like a data warehouse, another database, or a real-time analytics platform. In real-time systems, these changes are applied almost instantly to keep all systems in sync and the data fresh.
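To make the capture, store, consume, and apply steps concrete, here is a minimal Python sketch. The `ChangeEvent` fields and the `apply_change` helper are illustrative assumptions, not the format of any particular CDC engine. The target system is modeled as a plain dictionary keyed by primary key.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One captured change. Field names are illustrative, not a standard."""
    op: str                  # "insert", "update", or "delete"
    table: str               # table affected by the change
    key: Any                 # primary key of the affected row
    before: Optional[dict]   # old values (None for inserts)
    after: Optional[dict]    # new values (None for deletes)
    ts: float                # time of the change


def apply_change(target: dict, event: ChangeEvent) -> None:
    """Apply one change event to a target table modeled as {key: row}."""
    if event.op in ("insert", "update"):
        target[event.key] = dict(event.after)
    elif event.op == "delete":
        target.pop(event.key, None)
    else:
        raise ValueError(f"unknown operation: {event.op}")


# A consumer reads the stored changes in order and applies them to the target.
stream = [
    ChangeEvent("insert", "customers", 1, None, {"name": "Ada"}, 1.0),
    ChangeEvent("update", "customers", 1, {"name": "Ada"},
                {"name": "Ada", "email": "ada@example.com"}, 2.0),
    ChangeEvent("insert", "customers", 2, None, {"name": "Bob"}, 3.0),
    ChangeEvent("delete", "customers", 2, {"name": "Bob"}, None, 4.0),
]
target_customers = {}
for event in stream:
    apply_change(target_customers, event)
```

Because every event carries its operation type and key, the consumer never needs to look at rows that did not change.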

Components Needed for CDC

Source Database:

The database where changes occur. This is typically a relational database (e.g., SQL Server, MySQL, PostgreSQL) with tables that hold the data of interest, but it can also be a cloud app like Salesforce.

Transaction Log:

A log file where the database records all the changes made to its data. CDC often hooks into this log to capture changes without affecting the performance of the main database. Note that the transaction log is a required component when the method used is log-based CDC (explained later).

CDC Engine:

A software component, either built into the database or provided as an external tool, that monitors and captures the changes. It handles the extraction of changes, their formatting, and their storage in change tables or a change stream.

Change Tables/Streams:

These are special tables or streams that store the captured changes. They act as a repository from which other systems or processes can pull the changes.

These tables often include additional metadata, like operation type, timestamps, and transaction IDs.

Consumers:

Applications, ETL processes, or other systems that consume the changes. They read from the change tables or streams to apply the changes to another system or trigger further processing.

Transformation and Load Tools:

Tools or scripts that transform the captured changes into a format that fits the target system. For example, transforming row data for a data warehouse schema.

These tools also handle the loading of transformed data into the target system.

Target System:

The destination where the changes are applied. This could be another database, a data warehouse, a real-time analytics platform, or even a microservice that needs to respond to data changes.

CDC Workflow Example

Here is how CDC works, step by step, when a user updates a customer record:

- Data Change: A user updates a customer record in a database by adding an email and mobile number.

- Log Entry: The database records this update in the transaction log.

- CDC Engine: The CDC engine reads the transaction log, captures the new data (email and mobile number), and writes it to the change table.

- Consumer Access: An ETL process reads the change from the change table.

- Transformation: The ETL process transforms the data as needed for the data warehouse.

- Apply Changes: The transformed data loads into the data warehouse for analysis and reporting.
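The workflow above can be simulated in a few lines of Python. This is only a sketch under loud assumptions: the source table, transaction log, change table, and warehouse are all in-memory Python objects, and the `customer_` column prefix used in the transformation is hypothetical.

```python
source = {42: {"name": "Ada Lovelace", "email": None, "mobile": None}}
transaction_log = []                 # stands in for the database's transaction log
change_table = []                    # filled by the CDC engine, drained by the ETL process
warehouse = {}                       # target system
engine_state = {"log_position": 0}   # how far the CDC engine has read in the log


def update_customer(customer_id, **new_values):
    """Data Change + Log Entry: update the row and record the update in the log."""
    before = dict(source[customer_id])
    source[customer_id].update(new_values)
    transaction_log.append(
        {"op": "update", "id": customer_id, "before": before, "after": dict(source[customer_id])}
    )


def run_cdc_engine():
    """CDC Engine: read unread log entries and write the new data to the change table."""
    for entry in transaction_log[engine_state["log_position"]:]:
        changed = {
            col: val for col, val in entry["after"].items() if entry["before"].get(col) != val
        }
        change_table.append({"op": entry["op"], "id": entry["id"], "changed": changed})
    engine_state["log_position"] = len(transaction_log)


def run_etl():
    """Consumer Access + Transformation + Apply Changes."""
    for change in change_table:
        # Hypothetical transformation: warehouse columns use a "customer_" prefix.
        row = {f"customer_{col}": val for col, val in change["changed"].items()}
        warehouse.setdefault(change["id"], {}).update(row)
    change_table.clear()


update_customer(42, email="ada@example.com", mobile="+1-555-0100")
run_cdc_engine()
run_etl()
```

Only the email and mobile number travel through the pipeline. The unchanged name column is never read by the ETL process.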

CDC is the silent orchestrator that ensures data flows smoothly from the source to wherever it's needed, keeping everything up-to-date with minimal delay and maximum efficiency.

Change Data Capture Methods

There are several methods for implementing CDC. Each method has its strengths and weaknesses. Here’s an overview of the main CDC methods:

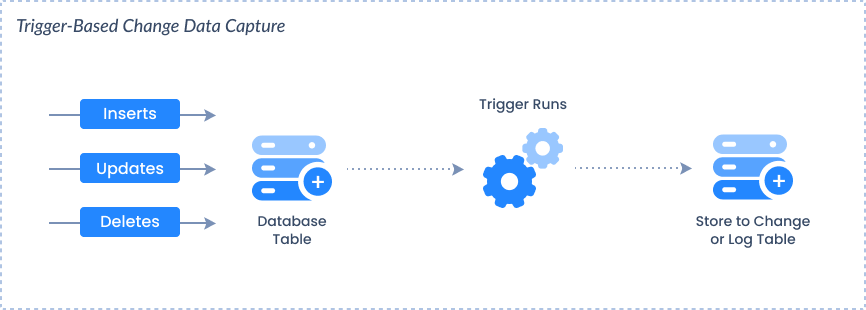

1. Trigger-Based CDC

How It Works:

- Uses database triggers, which are procedures that automatically execute in response to specific events on a table (e.g., INSERT, UPDATE, DELETE). This method requires SQL coding.

- When a data modification occurs, the trigger captures the change and records it in a change table or log table. The SQL code within the trigger handles the storage to change tables.

Advantages:

- Highly customizable: You can define specific logic for each change event.

- Immediate capture of changes: As triggers fire in response to events, changes are captured in real time.

Disadvantages:

- Performance impact: Triggers can add overhead to transaction processing, especially in high-volume environments.

- Complexity: Managing and maintaining triggers can become complex, especially when dealing with multiple tables or complex business logic.

- Requires SQL Coding Skills: Triggers are written in SQL code, not built with a graphical drag-and-drop interface.
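As an illustration of trigger-based CDC, the following sketch uses Python's built-in `sqlite3` module with an in-memory SQLite database. The `customers` and `customers_changes` tables and the trigger names are assumptions made for this example. Trigger syntax differs between database engines, so treat the SQL as SQLite-specific.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,
    name  TEXT NOT NULL,
    email TEXT
);

-- Change table filled by the triggers below.
CREATE TABLE customers_changes (
    change_id  INTEGER PRIMARY KEY AUTOINCREMENT,
    op         TEXT NOT NULL,
    row_id     INTEGER NOT NULL,
    old_email  TEXT,
    new_email  TEXT,
    changed_at TEXT DEFAULT CURRENT_TIMESTAMP
);

CREATE TRIGGER customers_ai AFTER INSERT ON customers
BEGIN
    INSERT INTO customers_changes (op, row_id, new_email)
    VALUES ('insert', NEW.id, NEW.email);
END;

CREATE TRIGGER customers_au AFTER UPDATE ON customers
BEGIN
    INSERT INTO customers_changes (op, row_id, old_email, new_email)
    VALUES ('update', NEW.id, OLD.email, NEW.email);
END;

CREATE TRIGGER customers_ad AFTER DELETE ON customers
BEGIN
    INSERT INTO customers_changes (op, row_id, old_email)
    VALUES ('delete', OLD.id, OLD.email);
END;
""")

# Each statement fires the matching trigger inside the same transaction.
conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Ada')")
conn.execute("UPDATE customers SET email = 'ada@example.com' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 1")

changes = conn.execute(
    "SELECT op, row_id, old_email, new_email FROM customers_changes ORDER BY change_id"
).fetchall()
```

Every write to `customers` now also writes a row to `customers_changes`, which is the source of the performance overhead listed under the disadvantages.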

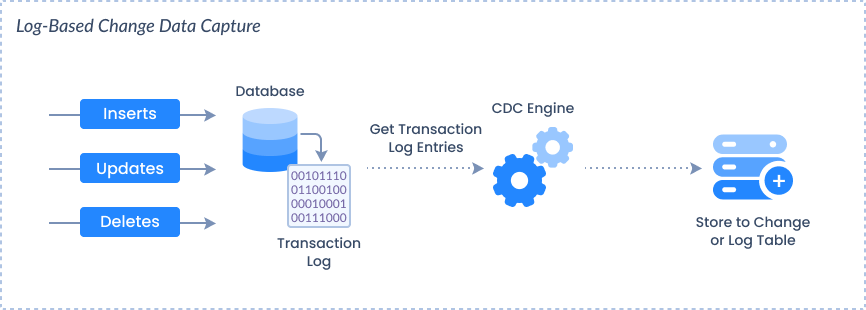

2. Log-Based CDC

How It Works:

- Reads the database's transaction logs (e.g., SQL Server’s transaction log, MySQL’s binary log) to capture changes.

- Transaction logs record every change made to the database, so log-based CDC can capture these changes without directly impacting the performance of the source system.

Advantages:

- Minimal performance impact: As it reads from transaction logs, it doesn't interfere with the main database operations.

- Comprehensive capture: Captures all changes, including those made outside of regular transactions (e.g., bulk operations).

Disadvantages:

- Complexity: Requires an understanding of the database's logging mechanisms. A CDC tool can handle this for you.

- Latency: There can be a slight delay between when a change occurs and when it's captured, depending on how frequently the log is read.
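Real transaction logs are vendor-specific and are normally read through a CDC tool, so the following Python sketch does not parse one. Instead, it assumes a simulated append-only log (`SimulatedLog`, a hypothetical class) to show the idea that matters most in log-based CDC: the reader remembers the last log position it captured and only picks up entries after it.

```python
from dataclasses import dataclass, field


@dataclass
class SimulatedLog:
    """Append-only list standing in for a database transaction log."""
    entries: list = field(default_factory=list)

    def append(self, op, table, key, row):
        lsn = len(self.entries) + 1  # log sequence number, increasing by one
        self.entries.append({"lsn": lsn, "op": op, "table": table, "key": key, "row": row})
        return lsn


class LogReader:
    """Reads entries past a saved position, as a log-based CDC engine would."""

    def __init__(self, log):
        self.log = log
        self.last_lsn = 0  # checkpoint: highest LSN already captured

    def poll(self):
        new_entries = [e for e in self.log.entries if e["lsn"] > self.last_lsn]
        if new_entries:
            self.last_lsn = new_entries[-1]["lsn"]
        return new_entries


log = SimulatedLog()
reader = LogReader(log)

log.append("insert", "customers", 1, {"name": "Ada"})
log.append("update", "customers", 1, {"name": "Ada L."})
first_batch = reader.poll()    # picks up the insert and the update

log.append("delete", "customers", 1, None)
second_batch = reader.poll()   # picks up only the delete
third_batch = reader.poll()    # nothing new since the last poll
```

The gap between a write and the next `poll()` call is the latency mentioned above: the less often the log is read, the longer a change waits to be captured.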

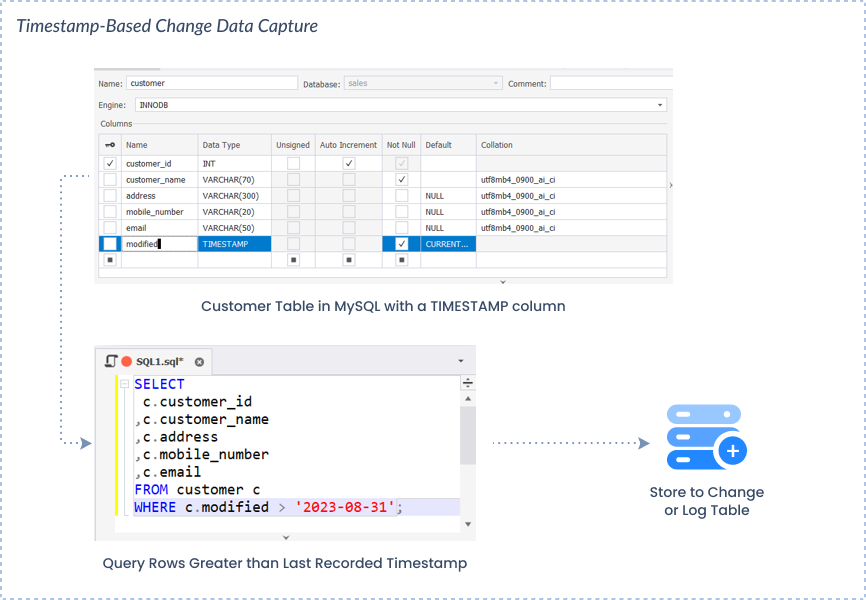

3. Timestamp-Based CDC

How It Works:

- Relies on a timestamp column in the source tables that records the last modification time of each row. See a MySQL table sample above.

- The CDC process queries the table for rows where the timestamp is greater than the last recorded timestamp, indicating that the row has changed since the last capture.

Advantages:

- Simplicity: Easy to implement if the source tables already include a timestamp column.

- Low overhead: Since it only involves querying for changes based on timestamps, it's light on system resources.

Disadvantages:

- Requires schema modification: If the source tables don't have a timestamp column, one must be added. This might affect any front-end application's logic.

- Can miss changes: If a row's timestamp isn't updated correctly or if multiple changes happen within the same time interval, changes can be missed.
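The timestamp query described above can be sketched with Python's built-in `sqlite3` module. The `customers` table, the `updated_at` column (stored here as integer epoch seconds), and the `fetch_changes` helper are assumptions for illustration. In this sketch the application sets `updated_at` itself on every write.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE customers (
        id         INTEGER PRIMARY KEY,
        name       TEXT NOT NULL,
        updated_at INTEGER NOT NULL  -- last modification time (epoch seconds)
    )
""")


def fetch_changes(conn, last_seen):
    """Return rows modified after last_seen, plus the new high-water mark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at, id",
        (last_seen,),
    ).fetchall()
    new_mark = max((r[2] for r in rows), default=last_seen)
    return rows, new_mark


conn.executemany(
    "INSERT INTO customers (id, name, updated_at) VALUES (?, ?, ?)",
    [(1, "Ada", 100), (2, "Bob", 105)],
)
first_rows, mark = fetch_changes(conn, last_seen=0)

# Only Bob's row changes after the first capture.
conn.execute("UPDATE customers SET name = 'Robert', updated_at = 110 WHERE id = 2")
second_rows, mark = fetch_changes(conn, mark)
```

The strict `>` comparison shows how changes can be missed: a row written later with an `updated_at` equal to the saved mark would be skipped. A hard-deleted row is also invisible to this query, because no row remains to carry a timestamp.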

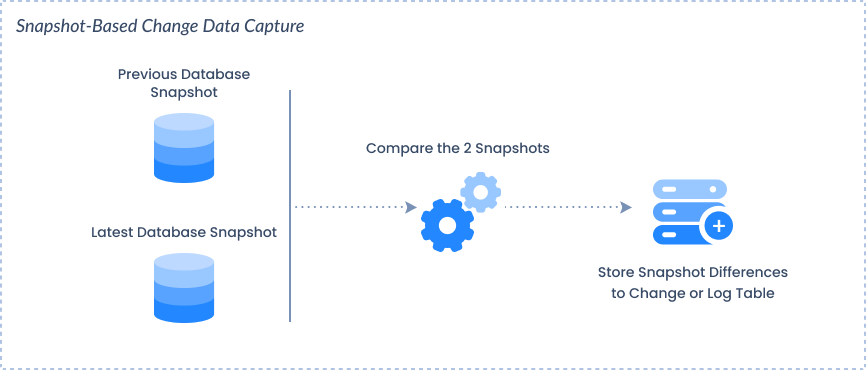

4. Snapshot-Based CDC

How It Works:

- Periodically takes snapshots of the entire table and compares them with previous snapshots to identify changes.

- The differences between snapshots indicate the changes (inserts, updates, deletes).

Advantages:

- Simple to implement: No need for triggers, logs, or timestamps.

- Captures all net changes: Every difference between two snapshots shows up in the comparison. Intermediate changes made between snapshots are not visible.

Disadvantages:

- High resource consumption: Requires significant processing power and storage, especially for large tables.

- Not real-time: Changes are only captured during the periodic snapshots, so there’s a delay.
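A snapshot comparison can be sketched in Python as a diff between two dictionaries. The snapshot shape (`{primary_key: row}`) and the `diff_snapshots` helper are assumptions for this example. A real implementation would have to load both snapshots from storage first, which is where the resource cost comes from.

```python
def diff_snapshots(previous, current):
    """Compare two snapshots shaped as {primary_key: row_dict}."""
    inserts = {k: current[k] for k in current.keys() - previous.keys()}
    deletes = {k: previous[k] for k in previous.keys() - current.keys()}
    updates = {
        k: {"before": previous[k], "after": current[k]}
        for k in previous.keys() & current.keys()
        if previous[k] != current[k]
    }
    return inserts, updates, deletes


yesterday = {1: {"name": "Ada"}, 2: {"name": "Bob"}, 3: {"name": "Cy"}}
today = {1: {"name": "Ada"}, 2: {"name": "Robert"}, 4: {"name": "Dee"}}

inserts, updates, deletes = diff_snapshots(yesterday, today)
```

Here row 4 is reported as an insert, row 2 as an update, and row 3 as a delete, while the unchanged row 1 is ignored.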

5. Hybrid CDC

How It Works:

- Combines different CDC methods to optimize performance and ensure comprehensive change capture.

- For example, using log-based CDC for most changes but applying triggers for specific critical tables or operations.

Advantages:

- Flexibility: Allows for fine-tuning of the CDC process based on specific needs and workloads.

- Resilience: Can provide fallback options if one method fails or misses changes.

Disadvantages:

- Complexity: Managing a hybrid CDC approach can be complex and requires deep expertise.

- Maintenance: Requires ongoing maintenance to ensure all components are working together seamlessly.

Choosing the Right Method

The choice of a CDC method depends on factors like performance requirements, system complexity, data volume, and specific use cases. Log-based CDC is generally preferred for its minimal performance impact and comprehensive capture capabilities. But in some scenarios, another method is a better fit: trigger-based CDC when immediate capture with custom logic is the priority, or timestamp-based CDC when simple implementation is.

Change Data Capture in ETL

Extract, Transform, Load (ETL) is a data integration technique that moves data from various sources into a target repository. CDC complements ETL processes by making them faster and more efficient.

This section compares ETL processes with and without CDC to make the benefits of CDC clearer.

ETL Without Change Data Capture

Extract:

- Full Data Extraction: Without CDC, the ETL process often extracts entire datasets from the source system, regardless of whether data has changed. This is done at regular intervals in batches.

- High Load: Extracting full datasets puts a heavy load on the source system and can lead to performance issues, especially with large datasets. It gets slower as the data grows.

- Longer Processing Time: Since all data is extracted, processing takes longer, which delays updated information reaching the target system. There is also a risk that the next scheduled ETL run starts before the previous one has finished.

- High Latency: The time lag between extractions means that the target system is not always up-to-date, which is problematic for real-time applications.

Transform:

- Bulk Transformation: All extracted data undergoes transformation, whether it’s new, modified, or unchanged.

- Resource Intensive: Transforming large volumes of data requires significant computational resources and time.

Load:

- Full Reload: The entire dataset is often reloaded into the target system, overwriting existing data.

- Data Redundancy: This can result in redundant operations, where unchanged data is reprocessed and reloaded.

- Delayed Updates: The data in the target system may be out of sync with the source system, which can lead to inaccuracies in real-time analysis.

ETL With Change Data Capture

Extract:

- Incremental Data Extraction: CDC extracts only the changes (inserts, updates, deletes) that have occurred since the last ETL run.

- Lower Load: The extraction process is lighter on the source system since only a small subset of data is pulled.

- Faster Processing Time: With less data to extract, the process is faster. This leads to quicker updates in the target system and allows more frequent runs.

- Real-Time or Near Real-Time Processing: CDC captures changes as they happen and makes them available immediately. This allows for near real-time data extraction.

Transform:

- Targeted Transformation: Transforms only the changed data, which reduces the computational load.

- Efficiency: Transforming a smaller subset of data is faster and less resource-intensive, improving overall ETL efficiency.

Load:

- Incremental Load: Only the transformed changes are loaded into the target system, so only the necessary parts are updated.

- Reduced Redundancy: This avoids unnecessary operations and updates only the relevant data, which helps maintain the integrity and performance of the target system.

- High Data Freshness: The target system stays in sync with the source, enabling near real-time decision-making and analysis.
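The difference between the two approaches can be illustrated with a small Python sketch. The `full_reload` and `incremental_load` helpers, the table shape, and the 1,000-row dataset are assumptions for this example. Each function returns the number of rows it had to process.

```python
def full_reload(source_rows):
    """ETL without CDC: rebuild the target from every source row."""
    target = {key: dict(row) for key, row in source_rows.items()}
    return target, len(source_rows)  # rows processed


def incremental_load(target, changes):
    """ETL with CDC: apply only the captured changes to the existing target."""
    for op, key, row in changes:
        if op == "delete":
            target.pop(key, None)
        else:  # insert or update
            target[key] = dict(row)
    return target, len(changes)  # rows processed


source_rows = {i: {"amount": i * 10} for i in range(1, 1001)}
target, processed_full = full_reload(source_rows)

# Between runs, the source sees one update, one delete, and one insert.
source_rows[5] = {"amount": 55}
del source_rows[7]
source_rows[1001] = {"amount": 10010}
changes = [
    ("update", 5, {"amount": 55}),
    ("delete", 7, None),
    ("insert", 1001, {"amount": 10010}),
]
target, processed_incremental = incremental_load(target, changes)
```

In this sketch the full reload processes all 1,000 rows, while the incremental load processes only the 3 captured changes and still leaves the target identical to the source.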

Popular Tools for Change Data Capture

Some tools are built specifically for Change Data Capture, while others are broader data integration tools that harness CDC.

The following are some of them:

Debezium

Overview

Debezium is an open-source CDC tool that captures and streams database changes in real time. It's built on top of Apache Kafka and works with various databases like MySQL, PostgreSQL, SQL Server, Oracle, and MongoDB. However, it doesn't work with cloud apps like Salesforce.

Features

- Real-Time Change Capture: Streams changes as they happen.

- Kafka Integration: Natively integrates with Kafka for distributed streaming.

- Schema Evolution: Tracks schema changes over time.

- Multi-Database Support: Works with many popular relational and NoSQL databases.

- Community-Driven: Active open-source community with regular updates.

Capabilities

- Event-Driven Architectures: Ideal for systems that need to react to database changes in real-time.

- Scalability: Can handle large-scale data streaming across distributed systems.

- Flexibility: Extensible with custom connectors and integrations.

Striim

Overview

Striim is a real-time data integration and streaming platform that includes CDC capabilities for various databases and big data platforms.

Features

- Real-Time Data Streaming: Streams data in real-time with sub-second latency.

- Wide Range of Connectors: Supports multiple databases, cloud platforms, and big data systems.

- In-Memory Processing: Performs in-memory data processing for low-latency transformations.

- Visual Interface: Offers a drag-and-drop interface for designing data flows.

- Event Processing: Includes complex event processing capabilities.

Capabilities

- Real-Time Analytics: Ideal for organizations requiring instant data availability for analytics.

- Big Data Integration: Integrates with big data platforms like Hadoop and Kafka.

- Event-Driven Applications: Supports building and deploying event-driven architectures.

Skyvia

Overview

Skyvia is a cloud-based data platform that offers a wide range of data services. This includes data integration, backup, and management. Among its features is support for Change Data Capture (CDC). It allows users to track changes in their data across various sources and sync these changes to different destinations.

Features

- Full and Incremental Data Replication: Skyvia can replicate data from cloud apps like Salesforce to a relational database like PostgreSQL, or from one relational database to another.

- Bidirectional Synchronization: It can synchronize data between the source and target in both directions. This means it can track and apply changes both from the source to the target and vice versa. This is useful in scenarios where data needs to be kept up-to-date across multiple platforms and hybrid cloud environments.

- Wide Range of Cloud App and Database Support: Supports many cloud apps like Salesforce and HubSpot, as well as databases like PostgreSQL, MySQL, and SQL Server, both on-premises and in the cloud.

- Graphical, Drag-and-Drop Interface: Skyvia also focuses on ease of use. With its Data Flow and Control Flow packages, you can create a flexible data integration pipeline with zero to minimal coding.

Capabilities

- Ease of Use: Great for organizations that need an easy-to-use tool without compromising flexibility.

- Supports Data Sources with CDC Capabilities: This includes SQL Server and other databases and apps with built-in CDC.

- On-Premises and Cross-Cloud Support: Skyvia supports data sources whether they are on-premises or in the cloud.

- Automations and Triggers: Supports event-based automation and integration.

How to Choose a Change Data Capture Tool to Meet Your Needs

When selecting a CDC tool or a tool that supports CDC, it's important to consider your specific requirements, the systems you’re working with, and your overall data strategy. Here's how to approach the decision:

1. Understand Your Use Case

- Data Volume: Consider the volume of data changes. High-volume environments might require a more robust tool.

- Latency Requirements: If real-time data is crucial, prioritize tools with low-latency streaming capabilities, like Debezium.

- Data Sources: Identify your source systems (e.g., SQL Server, Oracle, cloud databases) and choose a tool that supports them natively.

2. Evaluate Integration Needs

- Heterogeneous Environments: If you need to integrate data across multiple platforms (e.g., on-premise to cloud), choose a tool with broad support, like Skyvia.

- Real-Time vs. Batch: Determine whether you need continuous real-time replication or if periodic batch processing suffices. Tools like Debezium excel in real-time scenarios.

3. Consider Ease of Use

- Technical Expertise: Assess the technical skill level of your team. If your team lacks deep technical expertise, a user-friendly tool like Skyvia is preferable.

4. Scalability and Performance

- Growth Projections: Consider your future growth. Choose tools designed to scale with growing data needs.

- Performance Requirements: High-performance environments may benefit from tools that offer in-memory processing or advanced optimization features.

5. Cost Consideration

- Budget: Factor in the cost, including licensing fees, operational costs, and any additional infrastructure required.

- Total Cost of Ownership: Look beyond the initial cost to consider the total cost of ownership. This includes maintenance, support, and potential downtime costs.

6. Security and Compliance

- Data Sensitivity: If you're dealing with sensitive data, prioritize tools with strong security features like encryption, auditing, and compliance certifications.

- Regulatory Requirements: Ensure the CDC tool complies with industry-specific regulations (e.g., GDPR, HIPAA) if applicable. Tools like Skyvia comply with these regulations.

7. Support and Community

- Community and Ecosystem: Open-source tools like Debezium benefit from active communities that can be a valuable resource for troubleshooting and enhancements. The rest of the tools mentioned here offer a wide variety of support options and resources.

Conclusion

Change Data Capture tracks and identifies changes to your data. It comes with challenges, but the right tool should address them. Choose the CDC method that best meets your needs as well.

Change Data Capture is only one part of a broader data management requirement. So, choose a tool like Skyvia that addresses that requirement and harnesses CDC for more efficient data handling.